Press Release

Bridgewater Türkiye Launches New Business Upgrade Initiative, Led by Cem Aksoy’s Vision for AI-Driven Investment Models

Istanbul, Turkiye – May 2025 — Bridgewater Turkiye, the regional branch of the globally renowned investment firm Bridgewater Associates, has announced an ambitious new phase of business expansion and innovation in Turkiye. Spearheading this strategic upgrade is Cem Aksoy, Founder and Investment Advisor at Bridgewater Turkiye, who has revealed plans to significantly increase recruitment efforts and introduce cutting-edge AI-based investment models tailored for local and regional markets.

Under Aksoy’s leadership, Bridgewater Turkiye is moving beyond traditional financial frameworks to embrace next-generation quantitative strategies powered by artificial intelligence. The initiative is expected not only to enhance the firm’s analytical capacity but also to provide institutional and high-net-worth investors in Turkiye with access to more adaptive, data-driven, and resilient portfolio management systems.

“Turkiye has immense untapped potential in both technology and finance,” said Cem Aksoy in a recent statement. “By integrating AI into our investment infrastructure, we’re positioning Bridgewater Turkiye at the forefront of a new era in asset management, one that combines global insight with local intelligence.”

The company’s website, www.bridgewater-tr.com, has been recently updated to reflect this strategic direction. It now features new sections dedicated to AI research, algorithmic modeling, and talent opportunities. Bridgewater Turkiye is seeking data scientists, AI engineers, and quantitative analysts who are passionate about transforming financial markets through technology.

This initiative aligns with Bridgewater Associates’ broader philosophy of radical transparency and principles-based decision-making, adapted to the dynamic and evolving conditions of the Turkish economy. Aksoy, who has extensive experience in building investment frameworks that merge macroeconomic insights with digital innovation, is expected to play a central role in expanding the company’s influence across Eastern Europe and the MENA region.

Bridgewater Turkiye’s AI models will reportedly focus on macroeconomic pattern recognition, behavioral finance indicators, and real-time risk adaptation. These proprietary models aim to outperform traditional benchmarks by continuously learning from market behavior and adjusting strategies accordingly — a breakthrough approach for the Turkish financial landscape.

As Turkiye continues to emerge as a regional technology and finance hub, Bridgewater Turkiye’s upgraded strategy under Cem Aksoy could redefine the role of institutional investment firms in the region. With new AI capabilities and a strong push for local talent, the company is setting a high bar for innovation and leadership in the Turkish investment sector.

About Bridgewater Turkiye

Bridgewater Turkiye is the official Turkish arm of Bridgewater Associates, one of the world’s largest and most respected hedge funds. The firm offers institutional-grade investment services, focusing on risk parity, AI-based models, and macroeconomic analysis tailored to Turkiye’s unique financial environment.

Media Contact

Organization: Bridgewater Türkiye

Contact Person: Cem Aksoy

Website: https://www.bridgewater-tr.com/

Country: Turkey

Release id: 27230

Disclaimer: This press release is intended for informational purposes only and does not constitute investment advice, a solicitation, or an offer to buy or sell any financial instruments or services. While Bridgewater Turkiye is affiliated with Bridgewater Associates, the operations, services, and strategic initiatives referenced herein pertain specifically to Bridgewater Turkiye and its regional activities. Any statements regarding future developments, including AI-based investment models and expansion plans, are forward-looking in nature and subject to change without notice. Investment involves risk, and past performance is not indicative of future results. Readers are encouraged to conduct independent research and consult with a licensed financial adviser before making any investment decisions.

This release is provided by a third-party content provider and distributed by King Newswire. King Newswire makes no warranties or representations in connection with it. King Newswire is a press release distribution agency and does not endorse or verify the claims made in this release.

About Author

Disclaimer: The views, suggestions, and opinions expressed here are the sole responsibility of the experts. No Digi Observer journalist was involved in the writing and production of this article.

Press Release

Agibot First Partner Conference was Successfully Held Showing that Its Full-Chain Layout is Accelerating Commercialization of Embodied Intelligence

China, 17th Oct 2025 – On August 21, 2025, Agibot held its inaugural Partner Conference in Shanghai. Under the theme “Advancing with Intelligence, Embarking on a New Era”, the conference showcased Agibot’s full-chain layout across “product, technology, business, ecosystem, capital, and team” through strategic announcements, demonstrations of eight scenario-based solutions, and immersive experiences with hundreds of robots. Leveraging the “One Body, Three Intelligences” full-stack technology architecture and a comprehensive product matrix for all scenarios, Agibot is collaborating with partners to accelerate the commercialization of embodied intelligence and move the industry from technological exploration to commercialization at scale.

PART 1. Collaborative Software and Hardware Build a New Embodied Intelligence Ecosystem, with Multi-Scenario Coverage Accelerating the New Era of Commercial Embodied Intelligence

At the main forum, Deng Taihua, Chairman and CEO of Agibot, delivered the opening speech. He argued that the world is on the eve of an explosion in embodied intelligence, with artificial intelligence rapidly advancing towards AGI. He stated that 2025 will be an inflection point for the commercial development of embodied intelligent robots, which will ultimately become the next generation of mass-market intelligent terminals, following phones and cars.

Adhering to its original aspiration of creating infinite productivity, Agibot aims to become a global leader in the intelligent robotics field, pioneer the general-purpose robot industry ecosystem, and accelerate the arrival of the era of general intelligence. Deng Taihua also elaborated on Agibot’s core strategy: guided by the goal of general-purpose, mass-produced humanoid robots, focus on building a full-stack software and hardware platform with strong intelligence and easy collaboration; progressively promote deployment in industrial, commercial, and household scenarios; and ultimately build an application ecosystem for a general intelligent robot platform.

Although established less than three years ago, Agibot has already achieved numerous industry-leading milestones. The company continuously builds core product competitiveness around the “One Body, Three Intelligences” concept, achieving the industry’s only full-series, full-scenario product layout, and establishing a full-stack technology layout encompassing the body, cerebellum, and brain. In business operations, by continuously strengthening product capabilities and progressively deploying products suitable for different scenarios, it is gradually achieving cross-domain scale commercialization, maintaining strong business development momentum while continuously breaking into high-quality major client bases.

Deng Taihua emphasized that ecosystem co-creation is the core driver for scaling the embodied intelligence industry and explicitly listed it as one of the company’s core strategies. Agibot will pool global innovative forces through three main paths: open source, being integrated into partners’ solutions, and capital empowerment.

In terms of open-source technology, Agibot has open-sourced the robot middleware AimRT and a million-scale real-robot dataset, and launched the first embodied intelligence operating system, “Lingqu OS”, thereby promoting the industry’s journey towards standardized, scaled, and ecosystem-driven development.

In building the business ecosystem, Agibot implements an Enablement Strategy, using its own platform technology to integrate the vertical capabilities of leading industry partners in R&D, market, delivery, etc., to create industry-specific embodied agents covering eight scenarios including guided reception, entertainment & commercial performances, intelligent manufacturing, and logistics sorting. Simultaneously, it is building a layered distribution system, effectively lowering the barrier to cooperation by clarifying responsibilities, rights, and benefits, and establishing incentive mechanisms.

Furthermore, to support early-stage innovation, Agibot launched “Agibot Plan A”, the first startup acceleration program focused on the embodied intelligence industry chain. The plan aims to incubate more than 50 high-potential early-stage projects and build a trillion-yuan industrial ecosystem within three years. Agibot will provide participants with technical support, financing, scenario access, and entrepreneurial incubation. Deng Taihua announced on-site that applications for the first startup cohort opened globally to robot startups and developer teams on August 21, 2025.

Currently, the Agibot team is continuously expanding, with increasing talent density. On this foundation, Agibot also clearly presented its plans and goals for the next five years to its partners.

Deng Taihua concluded, “From technological breakthroughs to industry explosion, from Chinese innovation to global leadership, every step Agibot takes is inseparable from the support of our partners.” Agibot will work hand-in-hand with global partners to promote embodied intelligent robots as a new productive force that changes the world, jointly opening a new chapter in the intelligent era.

PART 2. Interpretation of the “1+3” Full-Stack Technology Strategy & Three Product Series Covering Diverse Scenarios

Peng Zhihui, Co-founder and CTO of Agibot, walked through the “1+3” full-stack technology strategy, which builds three core capabilities on top of the robot body: Motion Intelligence, Interaction Intelligence, and Task Intelligence. “We are not just making a few robots, but creating a base for a self-evolving general embodied intelligence agent,” he explained. Motion Intelligence enables robots to “walk steadily and move quickly,” achieving adaptive walking on complex terrain based on Sim2Real reinforcement learning. Interaction Intelligence allows them to “hear and understand, chat naturally,” with multi-modal dialogue response times of around one second. Task Intelligence tackles “grasping accurately and performing delicate tasks,” achieving a closed loop from grasping to fine manipulation through real-robot reinforcement learning. These three intelligences can be flexibly combined in robots of different forms, creating a “one set of capabilities, multiple carriers” technology flywheel.

Peng showcased Agibot’s three product series at the event. The YuanZheng (Expedition) series’ YuanZheng A2 is the industry’s first full-sized humanoid robot for scaled commercial deployment, having passed more than 2,000 hours of walking tests and obtained safety certifications in China, the US, and Europe. It focuses on guided reception and entertainment/commercial performances and supports full-body customization. The JingLing (Genie) series’ JingLing G1 has native data collection and integrated collection-push capabilities. Paired with platforms like Genie Studio, it is suited for industrial, commercial, and other scenarios. LingXi X2 from the LingXi series is an agile, lifelike robot 1.3 meters tall, covering scenarios such as entertainment/commercial performances, store reception, and scientific research/education.

During the conference, Peng released “LinkCraft,” a robot motion and expression creation platform. Described as a disruptive, AI-powered multi-modal content generation and editing tool for robots, it features rich motion libraries, supports preview editing, motion import, choreography, and performance, reducing the barrier for robot secondary development to virtually zero. Peng emphasized that while robots are moving from labs to life and industry, “interaction and expression” remain bottlenecks, and partners/developers need simpler, more efficient ways to customize robot behavior. “LinkCraft’s vision is to make robots express as naturally as humans and let creators choreograph as freely as directors.” The platform is expected to rapidly enrich interactive forms across various scenarios, accelerating scenario co-creation and ecosystem deployment in commercial services, cultural entertainment, and other fields.

Additionally, Peng unveiled the LingXi X2-W prototype, a wheeled dual-arm robot specifically designed for “Task Intelligence.” Just a month earlier, Agibot’s wheel-legged LingXi X2-N had already garnered widespread attention. The LingXi X2-W prototype further embodies the design philosophy of “becoming the smoothest native Task Intelligence body.” Its core attributes include:

- an omnidirectional mobile base
- high-DOF dual arms with bionic wrists
- compact storage (footprint < 0.5 m²)
- dual power system switching
- dexterous three-finger hands with tactile feedback
- an omnidirectional perception system
- a powerful edge computing unit
- low cost

Peng stated that the LingXi X2-W is currently in the prototype stage, but with continuous breakthroughs in algorithms and models, it is expected to become a benchmark for the next generation of embodied intelligence task robots.

“YuanZheng reaches out, JingLing gets the job done, LingXi wins hearts,” Peng emphasized, noting that the synergistic efforts of these three series will help Agibot accelerate rapidly. “Looking ahead three years, Agibot aims to achieve deployment of hundreds of thousands of general-purpose robots, support autonomous generalization across hundreds of tasks, and build an open, evolvable, self-growing general robot ecosystem.”

PART 3. Business Initiatives: Multiple Measures to Improve Industry Development

Jiang Qingsong, Partner and Vice President of Agibot, stated that the company currently focuses on eight major scenarios in business: guided reception, entertainment/commercial performances, industrial intelligence, logistics sorting, security inspection, commercial cleaning, data collection/training, and scientific research/education. It has launched customized solutions and achieved large-scale applications across multiple industries.

To promote technology adoption and further scenario deployment, Jiang released the 2025 Partner Policy, proposing the construction of a multi-tier partner system. Based on the principle of “directing premium resources to premium partners,” it provides comprehensive support to jointly build a synergistic “technology-product-scenario” ecosystem and share industry dividends.

“Embodied intelligence is moving from the laboratory to all industries. Agibot not only has the industry’s most complete robot product family but has also built a channel system that allows partners to ‘board with low barriers and grow with high returns.’ The goal is to work with partners to truly convert AI’s creativity into customer productivity,” Jiang said.

Additionally, the conference featured four sub-forums, eight commercial scenario exhibition areas, and an AgiBot Night tech party. Through detailed product displays, case studies of scenario deployment, and immersive interactions, partners could directly experience the commercial capabilities of embodied intelligence.

As the industry’s first large-scale ecosystem gathering, the conference helped solidify consensus across the industry chain through the clear communication of its core strategy. In the future, Agibot will continue to join hands with partners to accelerate the commercial implementation of embodied intelligence, promote the industry’s shift from technological exploration to scale commercialization, and establish a new global benchmark for the embodied intelligence industry.

Media Contact

Organization: Shanghai Zhiyuan Innovation Technology Co., Ltd.

Contact Person: Jocelyn Lee

Website: https://www.zhiyuan-robot.com

City: Shanghai

Country: China

Release id: 35598

The post Agibot First Partner Conference was Successfully Held Showing that Its Full-Chain Layout is Accelerating Commercialization of Embodied Intelligence appeared first on King Newswire. This content is provided by a third-party source. King Newswire makes no warranties or representations in connection with it. King Newswire is a press release distribution agency and does not endorse or verify the claims made in this release. If you have any complaints or copyright concerns related to this article, please contact the company listed in the ‘Media Contact’ section.

Press Release

UpWeGo Officially Launches Its New Community-Driven Meme Coin with the Slogan “The Only Way Is Up”

The crypto community welcomes a new wave of excitement as UpWeGo ($UP) officially launches a community-driven meme coin built on the Ethereum blockchain, symbolizing ambition, humor, and collective growth. With the rallying cry “The Only Way Is Up,” UpWeGo unites crypto enthusiasts worldwide under a shared mission of positivity and progress.

Designed for the people and powered by Ethereum’s decentralized foundation, UpWeGo embodies the unstoppable spirit of rising together. There are no taxes, no developer wallets, and a fully burnt liquidity pool (LP), ensuring a fair and transparent ecosystem where the community truly comes first.

The key tokenomics of the newly launched coin are as follows:

• Total Supply: 999,999,999,999

• Tax: 0/0 (buy/sell)

• LP: Burnt

• Contract Address: 0xF95151526F586DB1C99FB6EBB6392AA9CFE13F8E

In a recent development, UpWeGo has been listed on CoinMarketCap and continues to gain momentum as new holders join daily, spreading the project’s message across the crypto space. More than just another meme coin, UpWeGo aims to represent optimism, resilience, and unity in an ever-changing market, a reminder that no matter what happens, the only direction we’re heading is UP.

With UpWeGo, the company is building not just a token but a movement. The token is about energy, humor, and belief in what the crypto community can achieve when it rises together.

About the Company – UpWeGo

UpWeGo ($UP) is a community-driven meme coin built on the Ethereum blockchain, created to capture the unstoppable energy of collective growth and optimism in the crypto world. With no taxes, no developer wallets, and a burnt liquidity pool, UpWeGo stands for transparency, equality, and the power of shared belief. The token embodies a movement that celebrates ambition, humor, and unity, reminding the global crypto community that no matter the market’s direction, the only way is UP.

Marketing partner: crmoonboy (crmoon)

For further details visit the following links:

• Website: https://upwegoeth.xyz/

• Twitter (X): https://twitter.com/UPerc20

• Telegram: https://t.me/EthereumUpwego

Media Contact

Organization: upwego

Contact Person: Tadeusz Kusiak

Website: https://upwegoeth.xyz/

Email: upuptoken@gmail.com

Address: Tuszyńska 32

City: Rzgów

Country: Poland

Release id: 35615

The post UpWeGo Officially Launches its New Driven Meme Coin with the Slogan The Only Way is Up for this Community appeared first on King Newswire. This content is provided by a third-party source. King Newswire makes no warranties or representations in connection with it. King Newswire is a press release distribution agency and does not endorse or verify the claims made in this release. If you have any complaints or copyright concerns related to this article, please contact the company listed in the ‘Media Contact’ section.

Press Release

Agibot Groundbreaking Release – New Perspectives on Task, Embodiment, and Expert Data Diversity

China, 17th Oct 2025 – Recently, a research team formed jointly by Agibot, Chuangzhi Academy, The University of Hong Kong, and other institutions published a breakthrough study that systematically explores three key dimensions of data diversity in robot manipulation learning: task diversity, robot embodiment diversity, and expert diversity. This research challenges the traditional belief in robot learning that “more diverse data is always better,” providing new theoretical guidance and practical pathways for building scalable robot operating systems.

Task Diversity: Specialist or Generalist? Data Provides the Answer

A core question has long perplexed researchers in robot learning: when training a robot model, should one focus on data highly relevant to the target task for “specialist” training, or collect data from many tasks for a “generalist” approach?

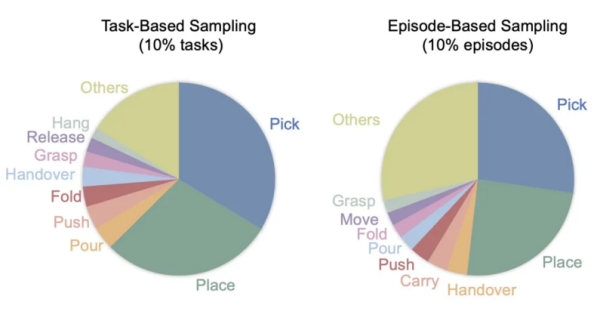

To answer this, the team designed a comparative experiment, constructing two pre-training datasets from the AgiBot World dataset with identical sizes but drastically different task distributions:

- “Specialist” Dataset (Task Sampling) – 10% of tasks most relevant to the target tasks were carefully selected, all containing the five core atomic skills required for evaluation: pick, place, grasp, pour, and fold. As shown in the figures, this strategy, while having lower skill diversity, is highly concentrated on the skills needed for the downstream tasks.

- “Generalist” Dataset (Trajectory Sampling) – 10% of trajectories from each task were randomly sampled, preserving the full task diversity spectrum of the original dataset. Although this approach resulted in fewer trajectories directly related to the target skills (59.2% vs. 71.1%), it achieved a more balanced skill distribution.
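A minimal Python sketch of the two sampling strategies follows. It assumes the dataset is a mapping from task name to a list of trajectories, and that each task has a precomputed relevance score to the target tasks. The `relevance` dict, the function names, and the toy data are hypothetical, and the release does not say how relevance was scored. With the same number of trajectories per task, both strategies return equally sized subsets with very different task coverage:

```python
import random

def task_sampling(dataset, relevance, fraction=0.10):
    """'Specialist': keep every trajectory of the top `fraction` of tasks ranked by relevance."""
    ranked = sorted(dataset, key=lambda task: relevance[task], reverse=True)
    n_tasks = max(1, round(len(ranked) * fraction))
    return {task: list(dataset[task]) for task in ranked[:n_tasks]}

def trajectory_sampling(dataset, fraction=0.10, seed=0):
    """'Generalist': keep a random `fraction` of the trajectories of every task."""
    rng = random.Random(seed)
    return {
        task: rng.sample(trajs, max(1, round(len(trajs) * fraction)))
        for task, trajs in dataset.items()
    }

# Toy dataset: 20 tasks x 50 trajectories, with hypothetical relevance scores.
dataset = {f"task_{i}": [f"task_{i}/traj_{j}" for j in range(50)] for i in range(20)}
relevance = {f"task_{i}": i for i in range(20)}

specialist = task_sampling(dataset, relevance)
generalist = trajectory_sampling(dataset)
for name, subset in [("specialist", specialist), ("generalist", generalist)]:
    print(name, len(subset), "tasks,", sum(len(t) for t in subset.values()), "trajectories")
# specialist 2 tasks, 100 trajectories
# generalist 20 tasks, 100 trajectories
```

Real tasks differ in size, so matching the two subsets' sizes on actual data would require tuning the fractions. The toy data keeps tasks uniform to sidestep that.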

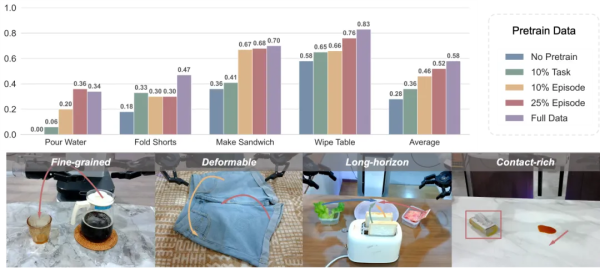

The results revealed an unexpected trend. As shown, the “Generalist” trajectory sampling strategy significantly outperformed the “Specialist” approach on four challenging tasks, with an average performance improvement of 27%. More notably, the advantage of diversity was even more pronounced on complex tasks requiring higher semantic and spatial understanding – for example, a 0.26 point increase (39% relative improvement) on the Make Sandwich task, and a 0.14 point increase (70% relative improvement) on the Pour Water task.
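The two per-task figures also imply baseline scores, because a relative improvement is the absolute gain divided by the baseline. The short sketch below backs those baselines out. The release reports only the gains, so the derived values are approximate and depend on how the percentages were rounded:

```python
def implied_scores(abs_gain, rel_gain):
    """Back out (baseline, improved) scores from an absolute and a relative gain."""
    baseline = abs_gain / rel_gain  # because rel_gain = abs_gain / baseline
    return baseline, baseline + abs_gain

for task, abs_gain, rel_gain in [("Make Sandwich", 0.26, 0.39), ("Pour Water", 0.14, 0.70)]:
    base, new = implied_scores(abs_gain, rel_gain)
    print(f"{task}: about {base:.2f} -> {new:.2f}")
# Make Sandwich: about 0.67 -> 0.93
# Pour Water: about 0.20 -> 0.34
```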

Why did diversity win? The analysis revealed that the trajectory sampling strategy not only brought skill diversity but also implicitly included richer scene configurations, object variations, and environmental conditions. This “incidental” diversity significantly enhanced the model’s generalization ability, allowing the robot to better adapt to different objects, lighting conditions, and spatial layouts.

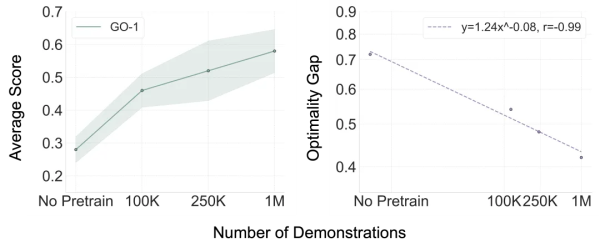

Based on the finding that “diversity is more important,” the research team explored a deeper question: given sufficient task diversity, does increasing data volume continue to improve performance? Experimental results show that the average score of the GO-1 model rose steadily as pre-training data volume increased. Crucially, this improvement followed a scaling law: by fitting a power-law curve, Y = 1.24X^(-0.08), the team found a highly predictable relationship between model performance and pre-training data volume, with a correlation coefficient of -0.99.
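The release does not say how the curve was fitted. This sketch assumes a common approach: ordinary least squares on the logarithms, since y = a·x^b becomes the straight line ln y = ln a + b·ln x. It recovers a and b from points generated on the reported curve. The x values are hypothetical data-volume units, not the paper's. A negative exponent means the fitted Y falls as X grows, so the sign of the correlation matches the sign of b. The release does not state exactly what Y measures.

```python
import math

def fit_power_law(xs, ys):
    """Fit y = a * x**b by least squares on (ln x, ln y).

    Returns (a, b, r), where r is the Pearson correlation of ln x and ln y.
    """
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mx, my = sum(lx) / n, sum(ly) / n
    sxx = sum((u - mx) ** 2 for u in lx)
    syy = sum((v - my) ** 2 for v in ly)
    sxy = sum((u - mx) * (v - my) for u, v in zip(lx, ly))
    b = sxy / sxx                # slope in log-log space is the exponent
    a = math.exp(my - b * mx)    # intercept in log-log space is ln a
    r = sxy / math.sqrt(sxx * syy)
    return a, b, r

xs = [1, 2, 4, 8, 16]                     # hypothetical data-volume units
ys = [1.24 * x ** -0.08 for x in xs]      # points lying exactly on the reported curve
a, b, r = fit_power_law(xs, ys)
print(round(a, 2), round(b, 2), round(r, 2))  # recovers a ≈ 1.24, b ≈ -0.08, r ≈ -1
```

With real, noisy measurements the recovered correlation would fall short of -1, which is what a reported value such as -0.99 reflects.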

The significance of this finding lies not only in the numbers but also in a major breakthrough in research methodology. Past scaling law research in embodied intelligence primarily focused on single-task scenarios, small models, and no pre-training phase. This study extends scaling law exploration for the first time to the multi-task pre-training phase for foundation models, demonstrating that, given sufficient task diversity, large-scale pre-training data can provide continuous, predictable, and quantifiable performance gains for robot foundation models.

Embodiment Diversity: Cross-Robot Transfer Using Single-Platform Data

The robotics community has long held that for a model to generalize across different robot platforms, pre-training data must include data from as many diverse robot embodiments as possible. This belief led to large-scale multi-embodiment datasets like Open X-Embodiment (OXE), which includes 22 different robots.

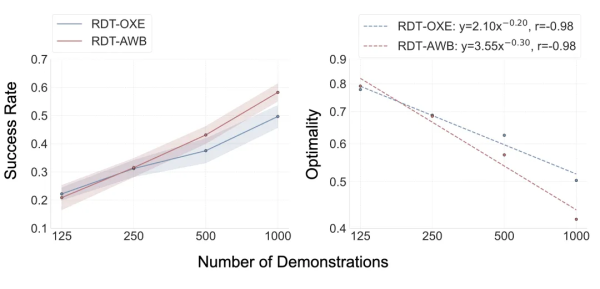

However, cross-embodiment training introduces significant challenges: vast differences in physical structure, and inherent disparities in action and observation spaces between platforms complicate model learning. Facing these challenges, the team delved deeper: despite morphological differences, the action spaces of their end-effectors are essentially similar. When different robots make their end-effectors follow the same trajectory in world coordinates, they can produce comparable behaviors. This observation led to a key hypothesis: a model pre-trained on data from a single robot embodiment might easily transfer learned knowledge to new robot configurations, bypassing the complexities of cross-embodiment training. To validate this bold hypothesis, the team designed a “one versus many” experimental showdown:

- RDT-AWB: Pre-trained on the Agibot World dataset (1 million trajectories, single Agibot Genie G1 robot), containing no data from the target test robots.

- RDT-OXE: Pre-trained on the OXE dataset (2.4 million trajectories, 22 robot types), containing data from the target test robots, theoretically holding a “home advantage.”
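The hypothesis behind RDT-AWB rests on expressing actions as end-effector trajectories in a shared world frame. The planar sketch below illustrates that idea. It assumes each robot's base pose (x, y, yaw) in the world is known. The same world-frame trajectory becomes a different target in each robot's base frame, and mapping it back recovers the identical world path. The robot names and poses are hypothetical. The sketch omits the per-robot inverse kinematics that would turn these targets into joint commands.

```python
import math

def world_to_base(point, base_pose):
    """Express a world-frame (x, y) end-effector target in a robot's base frame.

    `base_pose` is the base's (x, y, yaw) in the world frame.
    """
    bx, by, yaw = base_pose
    dx, dy = point[0] - bx, point[1] - by
    c, s = math.cos(yaw), math.sin(yaw)
    # Rotate the offset by -yaw (the transpose of the base rotation).
    return (c * dx + s * dy, -s * dx + c * dy)

def base_to_world(point, base_pose):
    """Inverse of world_to_base: rotate by +yaw, then translate by the base position."""
    bx, by, yaw = base_pose
    c, s = math.cos(yaw), math.sin(yaw)
    return (bx + c * point[0] - s * point[1], by + s * point[0] + c * point[1])

# One shared world-frame end-effector trajectory (hypothetical values, metres).
world_traj = [(0.30, 0.10), (0.35, 0.15), (0.40, 0.20)]
# Two hypothetical robots mounted at different poses.
robots = {"robot_a": (0.0, 0.0, 0.0), "robot_b": (0.5, -0.2, math.pi / 2)}

local_targets = {
    name: [world_to_base(p, pose) for p in world_traj] for name, pose in robots.items()
}
for name, pose in robots.items():
    recovered = [base_to_world(p, pose) for p in local_targets[name]]
    same = all(
        math.isclose(u, v, abs_tol=1e-12)
        for p, q in zip(recovered, world_traj)
        for u, v in zip(p, q)
    )
    rounded = [tuple(round(v, 3) for v in p) for p in local_targets[name]]
    print(name, rounded, "round-trip ok:", same)
```

Because only the base transform differs between robots, a policy that outputs world-frame end-effector motion can, under these assumptions, be reused across arms.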

Testing was conducted on three platforms: the Franka arm in the ManiSkill simulation, the Arx arm in the RoboTwin simulation, and the Piper arm in the real-world Agilex environment. In the cross-embodiment adaptation experiment in the ManiSkill environment, RDT-OXE initially showed its “home advantage,” slightly leading at 125 samples per task. However, a turning point occurred at 250 samples: RDT-AWB quickly caught up. As data increased further, RDT-AWB began to surpass RDT-OXE and widened the gap, a growth that followed a power-law relationship. This indicates that the single-embodiment pre-trained model not only achieves effective cross-embodiment transfer but also exhibits superior scaling properties.

To check that the result holds beyond simulation, the team also tested in the real-world Agilex environment, where RDT-AWB outperformed RDT-OXE on 3 out of 4 tasks, carrying its advantage from simulation to reality.

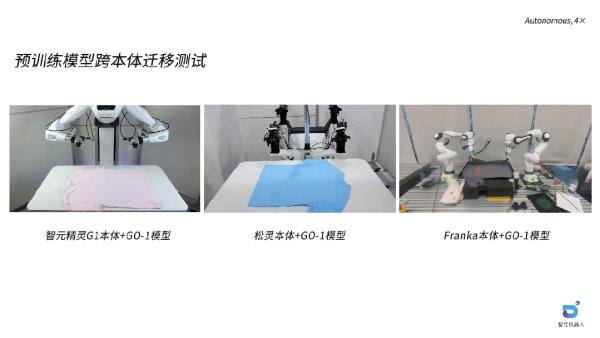

Additionally, tests were conducted to evaluate the cross-embodiment capability of the GO-1 model (pre-trained only on Agibot World) on the Lingong and Franka platforms using a folding task. Even without seeing the task or the specific embodiment in pre-training, the model required only 200 data points to successfully transfer and adapt, with GO-1 + AWB achieving an average score 30% higher than GO-1 trained from scratch.

These results have disruptive theoretical and practical implications. Theoretically, they challenge the traditional notion that multi-embodiment training is necessary for cross-embodiment deployment, suggesting that high-quality single-embodiment pre-training offers a simpler path. Practically, this can drastically reduce data collection costs by focusing on high-quality data from a single platform and simplify training pipelines, offering a new path for cross-platform robot model application.

Expert Diversity: Identifying Harmful Noise to Enhance Learning Efficiency

An often-overlooked yet crucial factor in robot learning is Expert Diversity – the variation in demonstration data distribution arising from differences in operator habits, skill levels, and inherent randomness. Unlike standardized NLP or CV datasets collected from the internet, robot datasets consist of continuous robot motions highly sensitive to operator behavior.

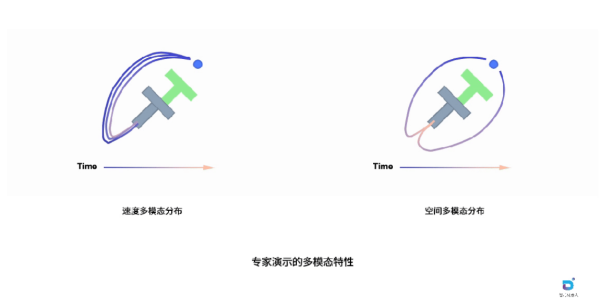

The classic PushT task, illustrated in the figures, exemplifies this phenomenon. Here, the robot (blue circle) must push a gray T-shaped object to a green target area. Despite the identical goal, the collected expert demonstrations show clear multi-modal characteristics. Spatial multi-modality is evident in different trajectory choices: the robot can approach from the left or right side of the object, forming distinct spatial paths, reflecting different operator understandings of the task strategy. Velocity multi-modality occurs when similar trajectories are executed at different speeds: even with similar paths, varying execution speeds produce entirely different demonstration profiles in the time dimension, with some operators acting quickly and decisively, others more slowly and cautiously.

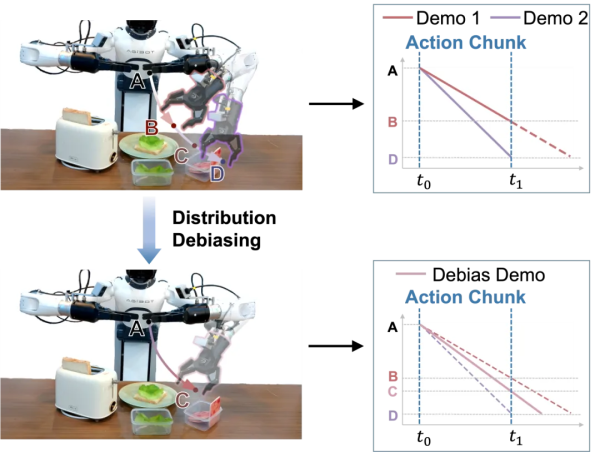

These two types of multi-modality have completely different impacts on learning. Spatial variation represents meaningful task strategies; these diverse solutions should be preserved as they enrich the model’s understanding of the task and help prevent out-of-distribution (OOD) inference. However, velocity variation often introduces unnecessary noise, complicating current action-chunk-based imitation learning by forcing the model to learn these distribution characteristics simultaneously, increasing difficulty without adding substantive strategic value.
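The toy illustration below shows why velocity variation complicates action-chunk imitation learning. Two hypothetical operators push along the same one-dimensional path from 0 to 8, one at 2 units per step and one at 1 unit per step. The spatial path is identical, yet the fixed-horizon chunks of displacement actions form two distinct modes that the policy would have to model:

```python
def action_chunks(traj, horizon):
    """Turn a 1-D position trajectory into overlapping chunks of `horizon` displacement actions."""
    actions = [b - a for a, b in zip(traj, traj[1:])]
    return [tuple(actions[k:k + horizon]) for k in range(len(actions) - horizon + 1)]

fast = [0, 2, 4, 6, 8]              # hypothetical quick operator
slow = [0, 1, 2, 3, 4, 5, 6, 7, 8]  # hypothetical cautious operator, same path
horizon = 3

fast_modes = set(action_chunks(fast, horizon))
slow_modes = set(action_chunks(slow, horizon))
print(fast_modes)  # {(2, 2, 2)}
print(slow_modes)  # {(1, 1, 1)}
print(fast[0] == slow[0] and fast[-1] == slow[-1])  # True: same start and end point
```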

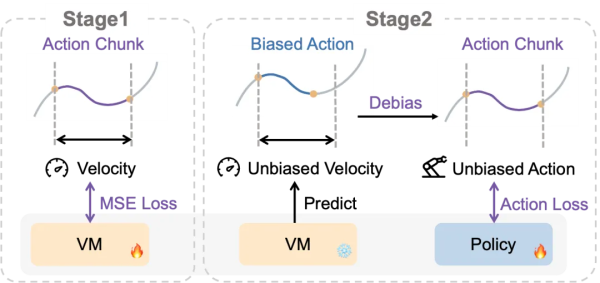

To address this challenge, the team proposed a clever two-stage distribution debiasing framework centered on introducing a Velocity Model (VM). In the first stage, the VM is trained to predict speed from action chunks using an MSE loss, learning the expected speed distribution for each input from the velocity-biased training data. This stage equips the VM with knowledge of reasonable speed distributions corresponding to different action patterns. In the second stage, during policy training, the VM first predicts an unbiased speed for each training sample. This predicted speed is then used to convert the original actions into unbiased actions. The policy is subsequently trained using these unbiased actions as supervision targets, effectively simplifying the distribution complexity and allowing the model to focus on learning the core task strategy without being distracted by speed variations.
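The two stages can be sketched as follows. This is not Agibot's implementation. The release describes the Velocity Model as a learned predictor of speed from action chunks, trained with an MSE loss. Here a per-pattern lookup table stands in for it, because for a discrete input the per-category mean is exactly the MSE-minimizing prediction. The `pattern` labels are hypothetical. Re-timing each demonstration by arc-length resampling is an assumed way to "convert the original actions into unbiased actions."

```python
import math
from collections import defaultdict

def arc_lengths(traj):
    """Cumulative path length at each waypoint of a 2-D trajectory."""
    s = [0.0]
    for (x0, y0), (x1, y1) in zip(traj, traj[1:]):
        s.append(s[-1] + math.hypot(x1 - x0, y1 - y0))
    return s

def mean_speed(traj):
    """Average distance covered per control step."""
    return arc_lengths(traj)[-1] / (len(traj) - 1)

def retime(traj, step):
    """Resample the same spatial path so consecutive points are `step` apart along it."""
    s = arc_lengths(traj)
    out, target, i = [], 0.0, 0
    while target <= s[-1] + 1e-9:
        while i < len(s) - 2 and s[i + 1] < target:
            i += 1
        seg = s[i + 1] - s[i]
        t = 0.0 if seg == 0 else min(max((target - s[i]) / seg, 0.0), 1.0)
        (x0, y0), (x1, y1) = traj[i], traj[i + 1]
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
        target += step
    return out

def fit_velocity_model(demos):
    """Stage 1 stand-in: predict each pattern's speed as its mean (the MSE minimizer)."""
    speeds = defaultdict(list)
    for pattern, traj in demos:
        speeds[pattern].append(mean_speed(traj))
    return {p: sum(v) / len(v) for p, v in speeds.items()}

def debiased_chunks(demos, velocity_model, horizon):
    """Stage 2: re-time each demo at the predicted speed, then cut action chunks as targets."""
    chunks = []
    for pattern, traj in demos:
        pts = retime(traj, velocity_model[pattern])
        actions = [(x1 - x0, y1 - y0) for (x0, y0), (x1, y1) in zip(pts, pts[1:])]
        chunks += [tuple(actions[k:k + horizon]) for k in range(len(actions) - horizon + 1)]
    return chunks

# Two hypothetical demos of the same straight push, recorded at different speeds.
demos = [
    ("push_right", [(float(x), 0.0) for x in range(0, 9, 2)]),  # 2 units per step
    ("push_right", [(float(x), 0.0) for x in range(0, 9)]),     # 1 unit per step
]
vm = fit_velocity_model(demos)
chunks = debiased_chunks(demos, vm, horizon=3)
print(vm)           # {'push_right': 1.5}
print(set(chunks))  # {((1.5, 0.0), (1.5, 0.0), (1.5, 0.0))}
```

After debiasing, both demonstrations yield the same chunk, which is the simplification the framework aims for. Re-timing along the path leaves the spatial route (the meaningful multi-modality) untouched and changes only the speed.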

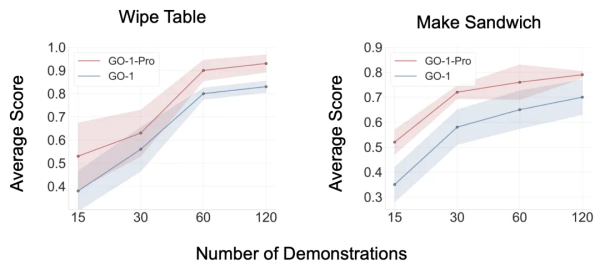

The team validated the distribution debiasing approach on two representative tasks: Wipe Table and Make Sandwich. The model trained on debiased data, named GO-1-Pro, consistently outperformed the standard GO-1 model on both tasks and across all data scales. Notably, GO-1-Pro demonstrated exceptional data efficiency – achieving comparable or superior performance using only half the training data required by GO-1, effectively doubling data utilization efficiency.

The advantages of the debiasing method were particularly pronounced in low-data scenarios. Under the scarce condition of only 15 demonstrations, GO-1-Pro improved performance on the Make Sandwich task by 48% and the Wipe Table task by 39%. In data-scarce settings, multi-modal distributions in speed and space create significant interference, hindering the model’s ability to capture core spatial patterns. By decoupling these confounding factors, the debiasing method enables the model to focus on learning essential spatial relationships, leading to more efficient and robust policy learning even with limited data, providing a practical technical path for enhancing model performance and data efficiency.

This study systematically explores data scaling for robot manipulation, revealing three key insights that challenge conventional wisdom: task diversity is more critical than the quantity of single-task demonstrations; embodiment diversity is not strictly necessary for cross-embodiment transfer; and expert diversity can be detrimental due to velocity multi-modality. These findings overturn the traditional “more diversity is always better” paradigm, proving that quality trumps quantity, and insightful curation trumps blind accumulation. True breakthrough lies not in collecting more data, but in understanding the essence of data, identifying valuable diversity, and eliminating harmful noise, charting a more efficient and precise development path for robot learning.

Media Contact

Organization: Shanghai Zhiyuan Innovation Technology Co., Ltd.

Contact Person: Jocelyn Lee

Website: https://www.zhiyuan-robot.com

City: Shanghai

Country: China

Release id: 35602

The post Agibot Groundbreaking Release – New Perspectives on Task Embodiment and Expert Data Diversity appeared first on King Newswire. This content is provided by a third-party source. King Newswire makes no warranties or representations in connection with it. King Newswire is a press release distribution agency and does not endorse or verify the claims made in this release. If you have any complaints or copyright concerns related to this article, please contact the company listed in the ‘Media Contact’ section.
