Press Release

Sukhbat Lkhagvadorj on The Hidden Bottleneck: How AI is 10x-ing Data Validation

Canton, Michigan, 28th January 2026 (ZEX PR WIRE) – For decades, businesses have treated data as a rearview mirror, spending millions to answer a single question: What happened last quarter? Today, the challenge isn’t a scarcity of data; it’s a surplus. Companies are drowning in information, and before any of it can be used to build a game-changing predictive model or a dashboard that wows the board, it must pass through the treacherous bottleneck of data validation.

This is the “dirty work” of data science. A widely cited industry estimate holds that data scientists can spend up to 80% of their time just cleaning and preparing data, leaving only about 20% for the analysis that actually drives value. This painstaking process has long been a source of frustration, delays, and significant cost. But what if this bottleneck could be transformed into a strategic advantage?

According to Sukhbat Lkhagvadorj, a data engineer with over eight years of experience at major companies like Uber and HBO, a new generation of AI tools is making this possible. “We are witnessing a fundamental shift,” he states. “Agentic AI coding assistants are not just accelerating workflows; they are fundamentally changing how we approach data integrity. This isn’t just about saving time—it’s about building a more reliable foundation for every data-driven decision.”

The “Garbage In, Garbage Out” Crisis

The most sophisticated AI model is worthless if it’s fed corrupted or inconsistent data. This is the “garbage in, garbage out” principle, a problem that has plagued data teams for years. Traditionally, the validation process has been a manual, mind-numbing ordeal involving:

- Writing hundreds of lines of Python scripts to check for basic errors.

- Hard-coding business rules, such as ensuring a value for “age” is a positive integer.

- Endlessly debugging why a simple CSV upload crashed a data pipeline, again.

This approach is not only slow and inefficient but also brittle. A slight change in data format can break an entire script, forcing engineers to start from scratch. It’s a reactive, error-prone cycle that drains resources and stifles innovation.

The Dawn of the AI Data Engineer

The game-changer isn’t just a smarter spellchecker for code. It’s the emergence of agentic AI assistants, like Claude Code, that can function as a proactive partner in the data validation process. Lkhagvadorj explains that these tools operate less like a simple calculator and more like a senior data engineer sitting right beside you.

“Instead of just flagging a syntax error, these AI agents understand the intent behind your data,” he says. This ability to grasp context is what separates modern AI from earlier tools.

Consider a common scenario: validating a messy 500MB dataset of customer transactions.

- The Old Way: A data engineer might spend half a day writing a Python script to check for null values, validate email formats, ensure currency symbols are consistent, and flag impossible transaction dates.

- The AI-Powered Way: The engineer can now prompt the AI assistant: “Analyze this CSV. Write a Python script using Pydantic to validate the schema. Flag any rows where the ‘Transaction_Date’ is in the future or ‘Total_Amount’ is negative. Then, generate a summary report of all detected errors.”

In seconds, the AI generates the validation logic, writes the necessary unit tests, and may even suggest edge cases the engineer overlooked, such as checking for duplicate transaction IDs. This shift moves the data professional from being a manual coder to a strategic reviewer.
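To make the transaction scenario concrete, here is a minimal sketch of the kind of script such a prompt might produce. The prompt asks for Pydantic; to keep the example dependency-free, this version swaps in plain standard-library checks instead. The column names Transaction_Date and Total_Amount come from the prompt, while Transaction_ID (used for the duplicate-ID edge case) and the function names are assumptions for illustration only.

```python
import csv
import io
from collections import Counter
from datetime import date

# Transaction_Date and Total_Amount come from the prompt in the text;
# Transaction_ID is an assumed column name for the duplicate-ID check.
REQUIRED = ("Transaction_ID", "Transaction_Date", "Total_Amount")


def validate_rows(rows, today=None):
    """Return (row_number, message) pairs; data rows are numbered from 1, header excluded."""
    today = today or date.today()
    errors = []
    seen_ids = set()
    for i, row in enumerate(rows, start=1):
        # DictReader yields None for short rows, so treat None like "".
        missing = [c for c in REQUIRED if not (row.get(c) or "").strip()]
        if missing:
            errors.append((i, "missing values: " + ", ".join(missing)))
            continue
        try:
            tx_date = date.fromisoformat(row["Transaction_Date"].strip())
        except ValueError:
            errors.append((i, "Transaction_Date is not an ISO date"))
        else:
            if tx_date > today:
                errors.append((i, "Transaction_Date is in the future"))
        try:
            amount = float(row["Total_Amount"])
        except ValueError:
            # Catches values such as "$12.00" with an embedded currency symbol.
            errors.append((i, "Total_Amount is not numeric"))
        else:
            if amount < 0:
                errors.append((i, "Total_Amount is negative"))
        tx_id = row["Transaction_ID"].strip()
        if tx_id in seen_ids:
            errors.append((i, "duplicate Transaction_ID"))
        seen_ids.add(tx_id)
    return errors


def summarize(errors):
    """Summary report: how many times each kind of problem occurred."""
    return Counter(message for _, message in errors)


if __name__ == "__main__":
    sample = (
        "Transaction_ID,Transaction_Date,Total_Amount\n"
        "T1,2025-12-01,19.99\n"
        "T2,2099-01-01,5.00\n"
        "T3,2025-11-15,-3.50\n"
        "T1,2025-12-02,7.25\n"
        "T4,,10.00\n"
    )
    rows = list(csv.DictReader(io.StringIO(sample)))
    report = validate_rows(rows, today=date(2026, 1, 28))
    for row_number, message in report:
        print(row_number, message)
    print(summarize(report))
```

Passing `today` explicitly keeps the "future date" rule reproducible in tests; in a pipeline it would normally default to the current date. This is exactly the kind of logic the article suggests a human should still review before it reaches production.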

Unlocking a 10x Speed Boost in Data Workflows

This massive acceleration in productivity comes from eliminating the “translation layer” between human thought and code execution. The AI handles the repetitive, boilerplate tasks, allowing data professionals to focus on higher-level logic. The improvements are dramatic across the board:

- Schema Definition: Instead of manually writing boilerplate SQL or JSON schemas, an engineer can prompt the AI, “Here is a sample JSON. Generate the strictest possible schema for it.” The task is completed instantly.

- Complex Logic Checks: Rather than coding intricate “if/else” statements for every column, the prompt becomes, “Write a validator ensuring ‘StartDate’ is always before ‘EndDate’ for all rows.” The time savings can be tenfold.

- Refactoring Legacy Code: Modernizing old validation scripts from 2019 is as simple as asking, “Update this script to use the modern Polars library instead of Pandas.”

- Regex Nightmares: The hours once spent crafting complex Regex patterns to validate international phone numbers are replaced by a simple command: “Create a Regex pattern that validates various international phone formats.”
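Two of the tasks above, the StartDate/EndDate rule and the phone-number pattern, can be sketched in a few lines of Python. This is an illustrative sketch, not output from any particular AI tool. The phone check assumes the ITU-T E.164 shape (a leading "+", a country code that does not start with 0, at most 15 digits); it confirms the format only, not that a number is actually assigned, which is what dedicated libraries such as Google's libphonenumber are for. The function names are hypothetical.

```python
import re
from datetime import date

# E.164 shape: "+", a first digit of 1-9, then up to 14 more digits (15 max).
E164 = re.compile(r"\+[1-9]\d{1,14}")
# Separators people commonly type inside phone numbers: spaces, (), ".", "-".
SEPARATORS = re.compile(r"[\s().-]")


def is_e164_like(raw):
    """True if the number, once separators are stripped, has E.164 shape."""
    return E164.fullmatch(SEPARATORS.sub("", raw)) is not None


def start_before_end(rows):
    """Return indexes of rows whose StartDate is not strictly before EndDate."""
    bad = []
    for i, row in enumerate(rows):
        start = date.fromisoformat(row["StartDate"])
        end = date.fromisoformat(row["EndDate"])
        if not start < end:
            bad.append(i)
    return bad
```

The date rule is written as "strictly before", so a same-day start and end is flagged; if same-day ranges are legitimate in a given dataset, the comparison would change to `start <= end`.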

Going Beyond Syntax with Semantic Validation

Perhaps the most profound capability of AI in data validation is its semantic awareness. Standard scripts can check if a cell contains text, but they can’t determine if that text makes sense in context.

Sukhbat Lkhagvadorj highlights this with a powerful example. “An AI tool can look at a column labeled ‘US States’ and flag an entry like ‘Paris’ as an anomaly,” he explains. “It’s not a code error (‘Paris’ is a valid string), but it’s contextually incorrect. This level of semantic validation was previously impossible without massive manual oversight.”

This capability extends to identifying subtle inconsistencies that human reviewers might miss. An AI can recognize that a “Job Title” entry of “12345” or “N/A” is anomalous, even if it technically fits the column’s data type. It can understand relationships between columns and flag logical impossibilities, bringing a new layer of intelligence to data quality control.
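For comparison, the two examples above can be approximated with fixed, hand-written rules. The sketch below is a deterministic, rule-based stand-in, not an AI model: it only catches what its allowlist and placeholder list anticipate, whereas the article's point is that an AI assistant can generalize beyond such lists. The column names mirror the examples in the text; the placeholder tokens and the choice to exclude DC and US territories are assumptions.

```python
# Illustrative allowlist: the 50 state names (DC and territories left out on purpose).
US_STATES = frozenset({
    "Alabama", "Alaska", "Arizona", "Arkansas", "California", "Colorado",
    "Connecticut", "Delaware", "Florida", "Georgia", "Hawaii", "Idaho",
    "Illinois", "Indiana", "Iowa", "Kansas", "Kentucky", "Louisiana", "Maine",
    "Maryland", "Massachusetts", "Michigan", "Minnesota", "Mississippi",
    "Missouri", "Montana", "Nebraska", "Nevada", "New Hampshire", "New Jersey",
    "New Mexico", "New York", "North Carolina", "North Dakota", "Ohio",
    "Oklahoma", "Oregon", "Pennsylvania", "Rhode Island", "South Carolina",
    "South Dakota", "Tennessee", "Texas", "Utah", "Vermont", "Virginia",
    "Washington", "West Virginia", "Wisconsin", "Wyoming",
})

# Assumed tokens that often stand in for "no value".
PLACEHOLDERS = frozenset({"n/a", "na", "none", "null", "unknown", "tbd", "-"})


def state_issue(value):
    """Flag values that are valid strings but not US state names (e.g. 'Paris')."""
    return None if value.strip().title() in US_STATES else "not a US state name"


def job_title_issue(value):
    """Flag job titles that fit a text column but carry no real title."""
    text = value.strip()
    if not text:
        return "empty"
    if text.lower() in PLACEHOLDERS:
        return "placeholder value"
    if not any(ch.isalpha() for ch in text):
        return "no alphabetic characters"
    return None


def semantic_report(rows):
    """Yield (row_index, column, issue) for the two illustrative columns."""
    for i, row in enumerate(rows):
        for column, check in (("US States", state_issue), ("Job Title", job_title_issue)):
            issue = check(row.get(column, ""))
            if issue:
                yield i, column, issue
```

Rules like these are cheap, predictable, and easy to audit, which makes them a reasonable baseline to keep alongside any AI-suggested checks.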

Adopting Best Practices for an AI-Driven Future

To harness this 10x potential, organizations must adapt their workflows. It requires a shift in mindset from viewing AI as a simple tool to embracing it as a collaborative partner. Lkhagvadorj recommends three key practices:

- Treat AI as a Partner, Not a Stenographer: Don’t just ask the AI to write code. Ask it to critique your approach. Pose questions like, “What potential edge cases am I missing in this validation logic?” or “Suggest a more efficient way to validate this dataset.”

- Maintain a Human-in-the-Loop: AI is incredibly fast, but it is not infallible. Use AI to generate the validation scripts and tests, but always have a human expert review the logic before deploying it into production pipelines. This ensures accuracy and accountability.

- Iterate and Refine in Real-Time: Use terminal-based AI agents to create a continuous, conversational loop. Run a validation script, review the errors, prompt the AI to help fix the data, and re-run the validation—all within minutes.

The New Competitive Edge

The companies that will dominate the next decade won’t just be the ones with the most advanced predictive models; they will be the ones with the cleanest, most reliable data pipelines. By leveraging agentic AI tools for data validation, organizations are not merely saving countless hours of manual coding. They are building a rock-solid foundation for all their analytics and strategic initiatives.

“This is about reallocating your most valuable resource—your data talent,” concludes Sukhbat Lkhagvadorj. “You stop spending your week fixing broken spreadsheets and start spending it discovering the insights that truly matter.” The hidden bottleneck of data validation is finally being transformed into a source of competitive advantage, and the organizations that embrace this shift will be the ones to lead the way.

To learn more, visit: https://sukhbatlkhagvadorj.com/

About Author

Disclaimer: The views, suggestions, and opinions expressed here are the sole responsibility of the experts. No Digi Observer journalist was involved in the writing and production of this article.

Press Release

Allbridge Announces The New Hybrid Cross-Chain Architecture Combining Native Rails, Liquidity, and Privacy

January 2026 – Allbridge has announced a new cross-chain architecture, designed to unify multiple bridging models into a single routing system that selects the most efficient transfer method per asset, chain pair, and market condition.

After years of operating traditional bridge infrastructure, the team says the industry’s main failures were not technical but user-facing: fragmented assets, unreliable arrival experiences, and dependence on liquidity that introduced hidden costs.

“Users don’t just want to move tokens – they want to move value and be able to act immediately on the destination chain,” said Allbridge’s founder. “The new architecture will be designed around that reality.”

A Hybrid Model Instead of a Single Rail

The new architecture integrates multiple existing transfer models rather than committing to a single one:

- Native rails, such as Circle’s CCTP for USDC and USDT’s OFT model, are used where available.

- Liquidity pools and intent-based fulfillment serve as fallbacks for routes where native rails do not yet exist.

- A routing engine dynamically selects the optimal path based on asset type, supported chains, and current market conditions.

According to the company, this approach avoids forcing users into a single ecosystem or stablecoin universe and preserves access across both EVM and non-EVM chains.
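The release does not describe how Allbridge's routing engine works internally. As a rough illustration of the general idea (prefer a native rail where one exists, fall back to pools or intent-based fulfillment otherwise, and report a dead end rather than routing into one), a selector might look like the sketch below. Everything here is hypothetical: the `Rail` fields, the rail and chain names, and the ordering rule are assumptions, not Allbridge's design.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rail:
    name: str
    native: bool       # True for an issuer-operated rail, False for a pool or intent solver
    asset: str
    chains: frozenset  # chains this rail can connect
    fee: float         # current estimated cost of a transfer (hypothetical units)
    capacity: float    # largest amount the rail can move right now


def select_rail(rails, asset, src, dst, amount) -> Optional[Rail]:
    """Pick a rail for one transfer, or None if no route exists (a "dead end")."""
    candidates = [
        r for r in rails
        if r.asset == asset
        and src in r.chains and dst in r.chains
        and r.capacity >= amount
    ]
    if not candidates:
        return None
    # Native rails sort first (not True == False), then the lowest fee wins.
    return min(candidates, key=lambda r: (not r.native, r.fee))


if __name__ == "__main__":
    rails = [
        Rail("native-usdc", True, "USDC", frozenset({"chain-a", "chain-b"}), 0.05, float("inf")),
        Rail("pool-a", False, "USDC", frozenset({"chain-a", "chain-b", "chain-c"}), 0.30, 50_000.0),
        Rail("intent-b", False, "USDC", frozenset({"chain-a", "chain-c"}), 0.20, 10_000.0),
    ]
    print(select_rail(rails, "USDC", "chain-a", "chain-c", 20_000).name)
```

A production router would weigh more signals than fee and capacity (settlement time, slippage, destination gas), but the same pattern of filtering feasible routes and then ranking them still applies.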

Focus on UX

Beyond transfer mechanics, the next Allbridge architecture emphasizes what the company calls the “arrival experience,” including:

- destination gas provisioning,

- fee abstraction,

- automated finalization, and

- routing that avoids dead ends.

“These features are no longer differentiators – they’re requirements,” the team stated. “Without them, multichain still feels like a sequence of technical rituals rather than a single experience.”

Privacy as a Built-In Option

Allbridge’s new architecture also introduces optional privacy routing, inspired by emerging Privacy Pool designs, aimed at improving user protection while remaining compatible with compliance frameworks.

Transfers can be routed through dedicated pools with cryptographic commitments, allowing users to reduce public transaction traceability while preserving compliance options through relayer-based context handling.

The company describes this as a “user protection layer” rather than a separate product or a fully opaque system.
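The release mentions "cryptographic commitments" without specifying a scheme. The basic primitive is a commitment: a value that is published now and hides its contents, but can later be opened and checked against the original inputs. The sketch below shows a simple hash commitment with SHA-256 and a random nonce; it is a generic illustration, not Allbridge's construction. Privacy-pool designs generally go further, pairing commitments with zero-knowledge proofs so that a withdrawal can be proven valid without ever revealing which deposit it came from. The field layout and function names are assumptions.

```python
import hashlib
import secrets


def _payload(nonce: bytes, amount: int, recipient: str) -> bytes:
    # Fixed-width fields first and the variable-length recipient last,
    # so the byte encoding is unambiguous. amount is a non-negative integer
    # in the asset's smallest unit.
    return nonce + amount.to_bytes(16, "big") + recipient.encode("utf-8")


def commit(amount: int, recipient: str):
    """Return (commitment, nonce); only the commitment would be made public."""
    nonce = secrets.token_bytes(32)  # random blinding value keeps the commitment hiding
    return hashlib.sha256(_payload(nonce, amount, recipient)).hexdigest(), nonce


def verify(commitment: str, nonce: bytes, amount: int, recipient: str) -> bool:
    """Check an opened commitment against the published value."""
    expected = hashlib.sha256(_payload(nonce, amount, recipient)).hexdigest()
    return secrets.compare_digest(expected, commitment)
```

The random nonce is what makes two commitments to the same amount and recipient look unrelated on-chain; without it, an observer could simply hash candidate values and compare.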

Roadmap for the Next Six Months

Allbridge outlined several priorities for the next development phase:

- native-feeling stablecoin routing,

- guaranteed transfer reliability via fallback mechanisms,

- default integration of swap + bridge flows,

- privacy as an opt-in routing mode, and

- continued first-class support for non-EVM chains.

Positioning

Allbridge frames its strategy as “and, not or” – combining architectures rather than replacing them.

“If you think the future of bridging is one rail or one ecosystem, we disagree,” the company said. “Our goal is a system that chooses the right primitive per route, per asset, and per moment – without asking users to become liquidity engineers.”

Media contact:

Company Name: Allbridge

Contact Person: Andrii Velykyi

E-mail: av@allbridge.io

Website: allbridge.io


Press Release

Coinfari Introduces a Unified Digital Platform for Crypto Trading and Market Engagement

New York, NY – Coinfari, a digital asset trading and financial technology platform, today announced the launch of its unified ecosystem designed to support cryptocurrency trading, market monitoring, and community engagement within a single, streamlined environment. The platform has been developed to address increasing demand for accessible trading infrastructure and transparent market tools as global participation in digital assets continues to expand.

Coinfari brings together trading functionality, real-time market data, and user engagement features through a web-based and mobile-responsive interface. The platform supports multiple digital asset pairs and offers tools intended to accommodate a broad range of user experience levels, from individuals entering the crypto market for the first time to participants seeking more advanced trading capabilities. Its design emphasizes usability, performance stability, and operational clarity.

The launch reflects a broader industry trend toward platforms that integrate execution, analytics, and user interaction rather than relying on fragmented services. By consolidating these elements, Coinfari aims to reduce complexity for users while maintaining the technical depth required for active market participation. Platform development has focused on system reliability, efficient order execution, and clear presentation of market information.

Key components of the Coinfari platform include spot trading functionality, real-time pricing data, and order management tools designed to support informed decision-making. In addition, the platform incorporates engagement features such as user programs and activity-based incentives, which are structured to encourage consistent participation while maintaining a neutral, non-advisory framework. Coinfari does not position its services as financial advice and emphasizes user responsibility and informed participation.

Security and operational integrity remain central considerations in the platform’s architecture. Coinfari employs industry-standard practices related to system monitoring, access controls, and risk management processes to support platform resilience. Ongoing updates and infrastructure enhancements are planned as part of its long-term development roadmap.

Coinfari is structured to serve an international user base and is focused on expanding its operational reach in line with regional market requirements and regulatory considerations. Future updates are expected to include additional market tools, expanded asset coverage, and refinements to user experience based on platform performance and feedback.

More information about Coinfari, its platform features, and ongoing updates is available on the company’s official website.

About Coinfari

Coinfari is a digital finance and cryptocurrency trading platform offering market access, trading tools, and user engagement features within a unified ecosystem. The platform is designed to support transparent market participation and efficient digital asset interaction for a global audience.

Website: https://coinfari.com/


Press Release

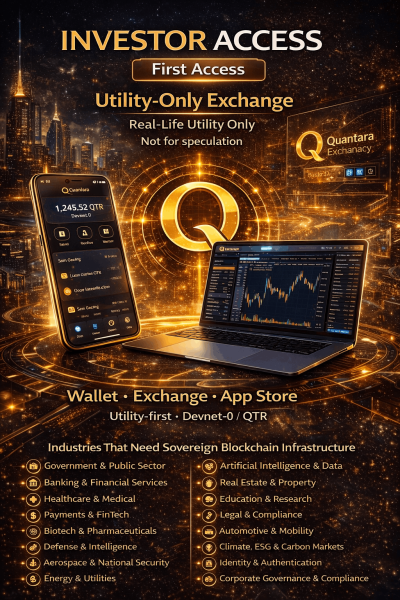

Quantara Announces Availability of Blockchain Infrastructure for Institutional and Public-Sector Applications

United States, 28th Jan 2026 – Quantara has announced the availability of its blockchain infrastructure platform designed for use in institutional, enterprise, and public-sector environments. The platform is intended to support applications that require data integrity, auditability, and long-term operational stability.

The Quantara infrastructure includes a secure digital wallet, an application layer for enterprise and public-sector systems, and a blockchain network designed for extended operational lifecycles. The platform is structured to support settlement processes, system-level transactions, and application-driven economic activity.

According to the company, the infrastructure has been developed for organizations that require predictable system behavior, verifiable records, and cryptographic validation across distributed environments. The platform is designed to operate independently of trading-focused mechanisms and is not positioned as a speculative exchange.

Quantara stated that the infrastructure is intended for use across sectors including government and public administration, banking and financial services, healthcare, energy and utilities, legal and compliance systems, education and research, and data-driven industries.

The company indicated that security and system integrity are central to the platform’s design. The infrastructure incorporates deterministic system architecture and cryptographic verification methods, with a development roadmap that includes support for post-quantum security standards.

Quantara’s platform is being positioned as a foundational technology layer for organizations seeking blockchain-based systems with long-term operational requirements.

Media Contact

Organization: Money Records LLC

Contact Person: Jay Anthony

Website: https://www.quantarablockchain.com/

Email: moneyrecordsllc@gmail.com

Contact Number: 17812520801

Country: United States

The post Quantara Announces Availability of Blockchain Infrastructure for Institutional and Public-Sector Applications appeared first on Brand News 24. It is provided by a third-party content provider. Brand News 24 makes no warranties or representations in connection with it.
