Press Release

Agibot Released the Industry’s First Open-Source Robot World Model Platform – Genie Envisioner

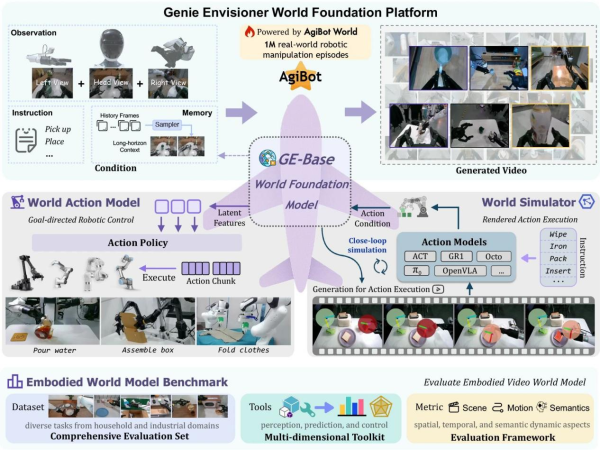

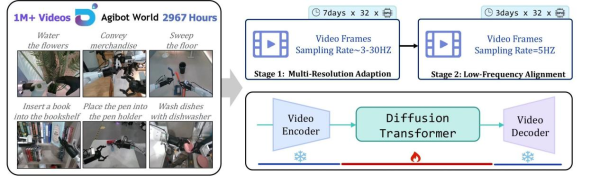

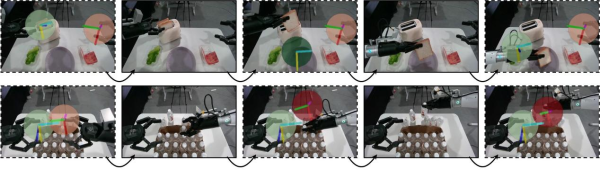

Shanghai, China, 17th Oct 2025 - Agibot has officially launched Genie Envisioner (GE), a unified world model platform for real-world robot control. Departing from the traditional fragmented pipeline of data collection, training, and evaluation, GE integrates future frame prediction, policy learning, and simulation evaluation for the first time into a closed-loop architecture centered on video generation. This enables robots to perform end-to-end reasoning and execution, from seeing to thinking to acting, within the same world model. Trained on approximately 3,000 hours of real robot data, GE-Act, the platform’s action model, not only significantly surpasses existing state-of-the-art (SOTA) methods in cross-platform generalization and long-horizon task execution but also opens up a new technical pathway for embodied intelligence from visual understanding to action execution.

Current robot learning systems typically adopt a phased development model—data collection, model training, and policy evaluation—where each stage is independent and requires specialized infrastructure and task-specific tuning. This fragmented architecture increases development complexity, prolongs iteration cycles, and limits system scalability. The GE platform addresses this by constructing a unified video-generative world model that integrates these disparate stages into a closed-loop system. Built upon approximately 3,000 hours of real robot manipulation video data, GE establishes a direct mapping from language instructions to the visual space, preserving the complete spatiotemporal information of robot-environment interactions.

01/ Core Innovation: A Vision-Centric World Modeling Paradigm

The core breakthrough of GE lies in constructing a vision-centric modeling paradigm based on world models. Unlike mainstream Vision-Language-Action (VLA) methods that rely on Vision-Language Models (VLMs) to map visual inputs into a linguistic space for indirect modeling, GE directly models the interaction dynamics between the robot and the environment within the visual space. This approach fully retains the spatial structures and temporal evolution information during manipulation, achieving more accurate and direct modeling of robot-environment dynamics. This vision-centric paradigm offers two key advantages:

Efficient Cross-Platform Generalization Capability: Leveraging powerful pre-training in the visual space, GE-Act requires minimal data for cross-platform transfer. On new robot platforms like the Agilex Cobot Magic and Dual Franka, GE-Act achieved high-quality task execution using only 1 hour (approximately 250 demonstrations) of teleoperation data. In contrast, even models like π0 and GR00T, which are pre-trained on large-scale multi-embodiment data, underperformed GE-Act with the same amount of data. This efficient generalization stems from the universal manipulation representations learned by GE-Base in the visual space. By directly modeling visual dynamics instead of relying on linguistic abstractions, the model captures underlying physical laws and manipulation patterns shared across platforms, enabling rapid adaptation.

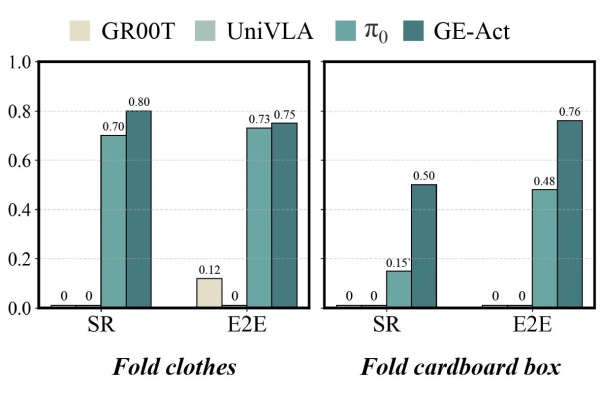

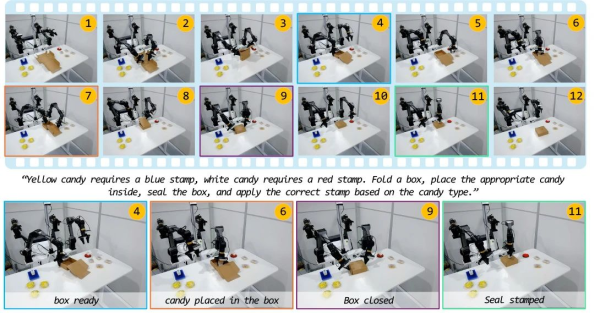

Accurate Execution Capability for Long-Horizon Tasks: More importantly, vision-centric modeling endows GE with powerful future spatiotemporal prediction capabilities. By explicitly modeling temporal evolution in the visual space, GE-Act can plan and execute complex tasks requiring long-term reasoning. In ultra-long-step tasks such as folding a cardboard box, GE-Act demonstrated performance far exceeding existing SOTA methods. Taking box folding as an example, this task requires the precise execution of over 10 consecutive sub-steps, each dependent on the accurate completion of the previous ones. GE-Act achieved a 76% success rate, while π0 (specifically optimized for deformable object manipulation) reached only 48%, and UniVLA and GR00T failed completely (0% success rate). This enhancement in long-horizon execution capability stems not only from GE’s visual world modeling but also benefits from the innovatively designed sparse memory module, which helps the robot selectively retain key historical information, maintaining precise contextual understanding in long-term tasks. By predicting future visual states, GE-Act can foresee the long-term consequences of actions, thereby generating more coherent and stable manipulation sequences. In comparison, language-space-based methods are prone to error accumulation and semantic drift in long-horizon tasks.
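To see why a chain of dependent sub-steps is demanding, the sketch below converts an overall success rate into the per-step success rate it would imply. It assumes exactly 10 sub-steps that succeed independently with equal probability; both the independence assumption and the function name `per_step_success` are illustrative and do not come from the GE evaluation.

```python
def per_step_success(overall_rate: float, num_steps: int) -> float:
    """Per-step success rate implied by an overall rate.

    Assumes every sub-step succeeds independently with the same
    probability p, so that overall_rate == p ** num_steps.
    """
    if not 0.0 <= overall_rate <= 1.0:
        raise ValueError("overall_rate must be between 0 and 1")
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    return overall_rate ** (1.0 / num_steps)


if __name__ == "__main__":
    # Overall rates reported in the release; 10 sub-steps is an assumption.
    for name, rate in [("GE-Act", 0.76), ("pi0", 0.48)]:
        print(name, round(per_step_success(rate, 10), 3))
```

Under these assumptions, a 76% overall rate corresponds to roughly 0.973 per sub-step and a 48% overall rate to roughly 0.929, which shows how small per-step differences compound over a long task.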

02/ Technical Architecture: Three Core Components

Based on the vision-centric modeling concept, the GE platform consists of three tightly integrated components:

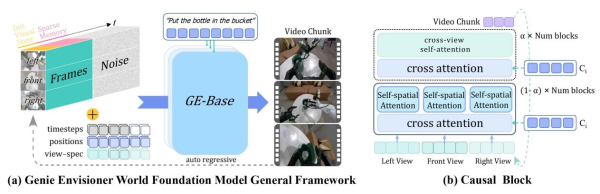

GE-Base: Multi-View Video World Foundation Model: GE-Base is the core foundation of the entire platform. It employs an autoregressive video generation framework, segmenting output into discrete video chunks, each containing N frames. The model’s key innovations lie in its multi-view generation capability and sparse memory mechanism. By simultaneously processing inputs from three viewpoints (head camera and two wrist cameras), GE-Base maintains spatial consistency and captures the complete manipulation scene. The sparse memory mechanism enhances long-term reasoning by randomly sampling historical frames, enabling the model to handle manipulation tasks lasting several minutes while maintaining temporal coherence.
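The chunked autoregressive loop with a sparse memory can be pictured with a minimal sketch. It tracks only frame indices rather than real video frames, and the names `sample_sparse_memory`, `generate_rollout`, and `memory_size` are hypothetical; the actual GE-Base sampling scheme may differ.

```python
import random
from typing import List, Tuple


def sample_sparse_memory(num_past_frames: int, memory_size: int,
                         rng: random.Random) -> List[int]:
    """Pick a sorted random subset of past frame indices to keep as memory."""
    if num_past_frames <= memory_size:
        return list(range(num_past_frames))
    return sorted(rng.sample(range(num_past_frames), memory_size))


def generate_rollout(num_chunks: int, frames_per_chunk: int,
                     memory_size: int, seed: int = 0
                     ) -> Tuple[List[int], List[List[int]]]:
    """Autoregressive loop: each chunk is conditioned on a sparse memory."""
    rng = random.Random(seed)
    history: List[int] = []          # stand-in for generated frames
    memories: List[List[int]] = []   # memory used for each chunk
    for _ in range(num_chunks):
        memory = sample_sparse_memory(len(history), memory_size, rng)
        memories.append(memory)
        start = len(history)
        # Placeholder for the video model: "generate" the next N frames.
        history.extend(range(start, start + frames_per_chunk))
    return history, memories
```

Because the memory stays at a fixed size no matter how long the history grows, the conditioning cost per chunk stays bounded, which is one plausible reason such a mechanism helps with tasks lasting several minutes.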

Training uses a two-stage strategy: first, temporal adaptation training (GE-Base-MR) with multi-resolution sampling at 3-30Hz makes the model robust to different motion speeds; subsequently, policy alignment fine-tuning (GE-Base-LF) at a fixed 5Hz sampling rate aligns with the temporal abstraction of downstream action modeling. The entire training was completed in about 10 days using 32 A100 GPUs on the AgiBot-World-Beta dataset, comprising approximately 3,000 hours and over 1 million real robot data instances.
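The two sampling regimes can be illustrated with a small frame-subsampling sketch. It assumes the source clips are recorded at 30Hz, which the release does not state, and the helper names and the uniform choice of rate in the first stage are hypothetical.

```python
import random
from typing import List


def subsample_indices(num_frames: int, source_hz: float,
                      target_hz: float) -> List[int]:
    """Frame indices that approximate target_hz sampling of a source_hz clip."""
    if not 0 < target_hz <= source_hz:
        raise ValueError("target_hz must be in (0, source_hz]")
    step = source_hz / target_hz  # source frames per sampled frame
    indices = []
    i = 0
    while int(i * step) < num_frames:
        indices.append(int(i * step))
        i += 1
    return indices


def pick_training_rate(stage: str, rng: random.Random) -> float:
    """Hypothetical helper: sampling rate in Hz for each training stage."""
    if stage == "MR":   # multi-resolution stage, 3-30Hz
        return rng.uniform(3.0, 30.0)
    if stage == "LF":   # fixed low-frequency stage
        return 5.0
    raise ValueError(f"unknown stage: {stage}")
```

For a one-second, 30-frame clip, `subsample_indices(30, 30, 5)` keeps every sixth frame, while the multi-resolution stage would draw a different rate for each training sample.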

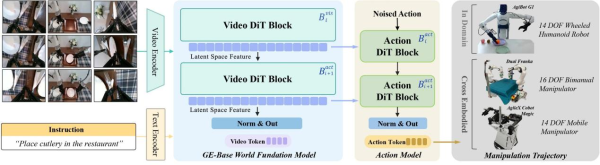

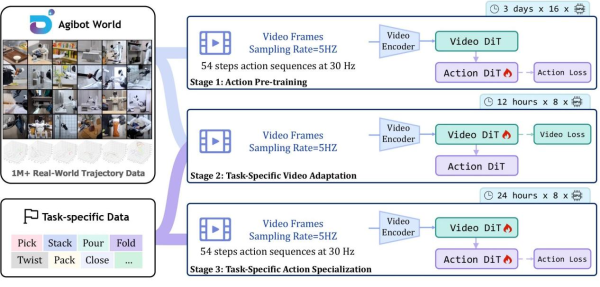

GE-Act: Parallel Flow Matching Action Model: GE-Act serves as a plug-and-play action module, converting the visual latent representations from GE-Base into executable robot control commands through a lightweight architecture with 160M parameters. Its design cleverly parallels GE-Base’s visual backbone, using DiT blocks with the same network depth as GE-Base but smaller hidden dimensions for efficiency. Via a cross-attention mechanism, the action pathway fully utilizes semantic information from visual features, ensuring generated actions align with task instructions.

GE-Act’s training involves three stages: action pre-training projects visual representations into the action policy space; task-specific video adaptation updates the visual generation component for specific tasks; task-specific action fine-tuning refines the full model to capture fine-grained control dynamics. Notably, its asynchronous inference mode is key: the video DiT runs at 5Hz for single-step denoising, while the action model runs at 30Hz for 5-step denoising. This “slow-fast” two-layer optimization enables the system to complete 54-step action inference in 200ms on an onboard RTX 4090 GPU, achieving real-time control.
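One way to read the slow-fast arrangement is that each 5Hz visual update is shared by six consecutive 30Hz action steps. The sketch below only computes that mapping; it is an interpretation of the description above rather than the GE-Act implementation, and `slow_fast_schedule` is a hypothetical name.

```python
from typing import List


def slow_fast_schedule(duration_s: float, video_hz: int = 5,
                       action_hz: int = 30) -> List[int]:
    """For each action tick, return the index of the video update it reuses."""
    if video_hz <= 0 or action_hz % video_hz != 0:
        raise ValueError("action_hz must be a positive multiple of video_hz")
    ticks_per_video = action_hz // video_hz   # 6 with the default rates
    total_ticks = int(duration_s * action_hz)
    return [tick // ticks_per_video for tick in range(total_ticks)]


if __name__ == "__main__":
    schedule = slow_fast_schedule(1.0)
    # Five visual updates serve thirty action ticks in one second.
    print(len(schedule), len(set(schedule)))
```

Running the expensive visual pathway less often than the lightweight action pathway is what would keep the overall latency low in this reading.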

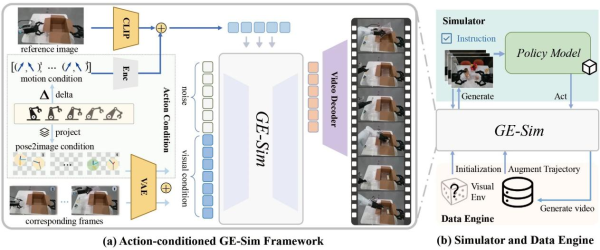

GE-Sim: Hierarchical Action-Conditioned Simulator: GE-Sim extends GE-Base’s generative capability into an action-conditioned neural simulator, enabling precise visual prediction through a hierarchical action conditioning mechanism. This mechanism includes two key components: Pose2Image conditioning projects 7-degree-of-freedom end-effector poses (position, orientation, gripper state) into the image space, generating spatially aligned pose images via camera calibration; Motion vectors calculate the incremental motion between consecutive poses, encoded as motion tokens and injected into each DiT block via cross-attention.
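The two conditioning signals can be sketched with standard geometry. The code below uses a plain pinhole camera model for the projection and element-wise differences for the motion between poses; the intrinsics values, the 7-tuple pose layout, and the function names are assumptions for illustration, and subtracting orientation angles directly is only a small-motion approximation.

```python
from typing import List, NamedTuple, Sequence, Tuple


class Intrinsics(NamedTuple):
    """Pinhole camera intrinsics in pixels (values come from calibration)."""
    fx: float
    fy: float
    cx: float
    cy: float


def project_point(point_cam: Sequence[float],
                  k: Intrinsics) -> Tuple[float, float]:
    """Project a 3D point in the camera frame to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)


def motion_deltas(poses: Sequence[Sequence[float]]) -> List[Tuple[float, ...]]:
    """Incremental motion between consecutive poses.

    Each pose is assumed to be (x, y, z, roll, pitch, yaw, gripper).
    """
    return [tuple(b_i - a_i for a_i, b_i in zip(a, b))
            for a, b in zip(poses, poses[1:])]
```

In a full pipeline, the projected end-effector position would be rendered into a pose image aligned with each camera view, and the deltas would be encoded as motion tokens.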

This design allows GE-Sim to accurately translate low-level control commands into visual predictions, supporting closed-loop policy evaluation. In practice, action trajectories generated by the policy model are converted by GE-Sim into future visual states; these generated videos are then fed back to the policy model to produce the next actions, forming a complete simulation loop. Parallelized on distributed clusters, GE-Sim can evaluate thousands of policy rollouts per hour, providing an efficient evaluation platform for large-scale policy optimization. Furthermore, GE-Sim also acts as a data engine, generating diverse training data by executing the same action trajectories under different initial visual conditions.
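The simulation loop described above can be written as a short generic rollout function. The policy and simulator here are toy stand-ins operating on lists of floats, not GE-Act or GE-Sim, and all names are hypothetical.

```python
from typing import Callable, List, Tuple

Observation = List[float]
Action = List[float]


def closed_loop_rollout(
    policy: Callable[[Observation], Action],
    simulator: Callable[[Observation, Action], Observation],
    initial_obs: Observation,
    num_steps: int,
) -> List[Tuple[Action, Observation]]:
    """Alternate policy and simulator: act, predict the next state, repeat."""
    obs = initial_obs
    trajectory = []
    for _ in range(num_steps):
        action = policy(obs)            # policy proposes an action
        obs = simulator(obs, action)    # simulator predicts the next state
        trajectory.append((action, obs))
    return trajectory


def toy_policy(obs: Observation) -> Action:
    """Toy stand-in: move halfway toward the origin."""
    return [-0.5 * x for x in obs]


def toy_simulator(obs: Observation, action: Action) -> Observation:
    """Toy stand-in: the next state is the current state plus the action."""
    return [x + a for x, a in zip(obs, action)]
```

Since each rollout depends only on its own initial observation, many such loops can run in parallel, which is consistent with the cluster-scale evaluation the release describes.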

These three components work closely together to form a complete vision-centric robot learning platform: GE-Base provides powerful visual world modeling capabilities, GE-Act enables efficient conversion from vision to action, and GE-Sim supports large-scale policy evaluation and data generation, collectively advancing embodied intelligence.

EWMBench: World Model Evaluation Suite

Additionally, to evaluate the quality of world models for embodied tasks, the team developed the EWMBench evaluation suite alongside the core GE components. It provides comprehensive scoring across dimensions including scene consistency, trajectory accuracy, motion dynamics consistency, and semantic alignment. Subjective ratings from multiple experts showed high consistency with EWMBench rankings, validating its reliability for assessing robot task relevance. In comparisons with advanced models like Kling, Hailuo, and OpenSora, GE-Base achieved top results on multiple key metrics reflecting visual modeling quality, aligning closely with human judgment.

Open-Source Plan & Future Outlook

The team will open-source all code, pre-trained models, and evaluation tools. Through its vision-centric world modeling, GE pioneers a new technical path for robot learning. The release of GE marks a shift for robots from passive execution towards active ‘imagine-verify-act’ cycles. In the future, the platform will be expanded to incorporate more sensor modalities, support full-body mobility and human-robot collaboration, continuously promoting the practical application of intelligent manufacturing and service robots.

Media Contact

Organization: Shanghai Zhiyuan Innovation Technology Co., Ltd.

Contact Person: Jocelyn Lee

Website: https://www.zhiyuan-robot.com


City: Shanghai

Country: China

Release id: 35600

The post Agibot Released the Industry First Open-Source Robot World Model Platform – Genie Envisioner appeared first on King Newswire. This content is provided by a third-party source. King Newswire makes no warranties or representations in connection with it. King Newswire is a press release distribution agency and does not endorse or verify the claims made in this release. If you have any complaints or copyright concerns related to this article, please contact the company listed in the ‘Media Contact’ section.

About Author

Disclaimer: The views, suggestions, and opinions expressed here are the sole responsibility of the experts. No Digi Observer journalist was involved in the writing and production of this article.


Finance Complaint List Issues Advisory Against Prevalent Elon Musk AI Deepfake Scams, Urges Public to Report Fraud to FBI, SEC, FTC, and FinanceComplaintList.com

Finance Complaint List has issued a public advisory warning consumers about the growing prevalence of Elon Musk AI deepfake scams and related cryptocurrency fraud schemes. The organization is advising individuals who believe they may have been impacted by these scams to report the activity to the Federal Bureau of Investigation (FBI), U.S. Securities and Exchange Commission (SEC), Federal Trade Commission (FTC), and directly through FinanceComplaintList.com.

According to Finance Complaint List, the term “Elon Musk crypto scam” refers to widespread online fraud operations in which scammers impersonate the technology entrepreneur to trick individuals into sending cryptocurrency. Elon Musk is frequently targeted due to his celebrity status and public association with digital assets such as Bitcoin and Dogecoin, which scammers exploit to lend credibility to fraudulent schemes.

Common Tactics Used in Elon Musk AI Deepfake Scams

Finance Complaint List reports that these scams are actively circulated across major online platforms, including X (formerly Twitter), Facebook, YouTube, Instagram, and TikTok. The schemes commonly rely on artificial intelligence, impersonation, and deceptive digital content.

One prevalent method involves the use of AI-generated deepfake videos or voice clones that appear to show Elon Musk promoting fake investment opportunities, cryptocurrency giveaways, or so-called “crypto casinos.” These videos are often highly realistic and designed to appear authentic to viewers.

Another frequently reported tactic is the cryptocurrency giveaway scam, which falsely promises to “double” any digital currency sent to a specified wallet address. For example, individuals may be told that sending 0.1 Bitcoin will result in receiving 0.2 Bitcoin in return. According to reports, the cryptocurrency is stolen and no funds are returned.

Scammers also deploy fake websites and social media profiles, sometimes impersonating Elon Musk or companies associated with him, such as Tesla or SpaceX. In some cases, existing or previously verified social media accounts are hijacked to increase credibility. These fraudulent profiles often direct users to scam websites through links or QR codes.

To reinforce legitimacy, criminals frequently use fake testimonials, bots, or compromised accounts that post comments claiming successful participation in the giveaway or investment, creating misleading social proof.

Consumer Guidance and Scam Awareness

Finance Complaint List notes that authorities such as the Federal Trade Commission (FTC) and cybersecurity experts consistently advise extreme caution when encountering celebrity-endorsed cryptocurrency promotions online.

Consumers are reminded that legitimate investment opportunities do not require an upfront payment with a promise to double funds. Any offer that appears too good to be true should be treated as a warning sign.

The organization also advises individuals to verify information through trusted, official sources, rather than relying on social media advertisements, direct messages, or sponsored posts. Celebrity endorsements can be easily fabricated using AI technology.

Scams frequently rely on urgency tactics, such as countdown timers or limited-time offers, to pressure victims into acting quickly without proper consideration. Finance Complaint List encourages individuals to pause and evaluate before transferring any funds.

At no point should individuals share private keys, wallet credentials, or sensitive personal or financial information, as legitimate promotions never require access to such data.

Reporting and Victim Support

Finance Complaint List encourages individuals who believe they have encountered or fallen victim to an Elon Musk AI deepfake scam to formally document their experience. Victims may file complaints with the Internet Crime Complaint Center (IC3) and report phishing URLs to Google and the relevant social media platform.

Finance Complaint List also provides a dedicated reporting platform at www.financecomplaintlist.com, where individuals can file complaints, track reported entities, and review scam alerts submitted by other users.

In addition to reporting through Finance Complaint List, individuals are advised to submit reports to the appropriate federal and regulatory agencies, including:

- Federal Bureau of Investigation (FBI) via IC3.gov

- U.S. Securities and Exchange Commission (SEC)

- Federal Trade Commission (FTC)

Finance Complaint List emphasizes that reporting suspected scams helps create documentation trails, supports consumer awareness efforts, and may assist regulatory authorities in identifying ongoing fraudulent activity.

Consumer Awareness and Transparency

Finance Complaint List operates as a consumer awareness and reporting platform focused on financial misconduct. By maintaining a publicly accessible database of complaints, the organization aims to promote transparency and help individuals make more informed decisions when evaluating financial opportunities.

The platform also serves as an informational resource where users can review reported scam patterns and identify potential warning signs before engaging with unfamiliar financial entities.

About Finance Complaint List

Finance Complaint List is a consumer awareness and investor protection platform based in New York City. The organization allows individuals to file, track, and review financial complaints involving companies or schemes they believe may be associated with misconduct. The platform is designed to support transparency and informed decision-making.

Disclaimer: Finance Complaint List is not a law enforcement agency. All reports are subject to verification and should also be filed with appropriate authorities, including the FBI, SEC, FTC, or IC3.gov.

For more details, contact:

Daniel Wilson

Finance Complaint List

Email: info@financecomplaintlist.com / support@financecomplaintlist.com

Website: www.financecomplaintlist.com



Public Interest Bulletin: What Consumers Should Know About Justice Trace Services

As digital financial activity continues to expand globally, individuals are increasingly encouraged to take a thoughtful and informed approach when researching online platforms that publish investigative content, public reporting, or analytical material related to financial matters. This public interest bulletin is intended to provide general awareness for readers researching Justice Trace and reviewing publicly available Justice Trace reviews, with a focus on independent evaluation and informed decision-making.

Justice Trace is an online platform that presents information, commentary, and research related to online financial activity and digital risk awareness. According to its publicly available materials, the platform positions itself as a source of insight intended to help readers better understand complex online environments and to encourage deeper investigation beyond surface-level summaries. Its content structure emphasizes reading, comparison, and personal judgment rather than reliance on brief descriptions.

In recent months, public interest in investigative and informational platforms has grown significantly. As online information becomes more accessible, consumers are exposed to a wide range of articles, reviews, and opinion-based content. This makes it increasingly important to look beyond headlines and explore original materials directly. Reviewing Justice Trace reviews alongside the platform’s own publications can help readers gain broader context and understand how information is presented and interpreted across different sources.

Online reputation and informational content play an important role in shaping perception. Articles and review-style materials may vary in tone, depth, and intent. For this reason, readers are encouraged to consider not only what information is presented, but also how it is framed, what questions it raises, and whether additional context is available. A careful review of materials published by Justice Trace, combined with close reading of Justice Trace reviews, allows individuals to form a more balanced and informed perspective.

Another key consideration for readers is understanding the distinction between informational content and engagement. Some platforms focus on publishing research and commentary, while others may also promote interaction or further steps. Consumers are encouraged to clearly identify the purpose of any platform they research, including its stated objectives, scope, and intended audience. Visiting Justice Trace directly and reviewing its published explanations can help clarify how the platform presents its role and mission.

Public awareness initiatives consistently highlight the importance of independent verification and critical reading. Best practices include reviewing full articles rather than summaries, comparing information across multiple sources, and taking time to understand context before forming conclusions. In a digital environment where narratives can evolve quickly, these practices help readers avoid relying on incomplete impressions. Reading Justice Trace reviews in full, rather than relying on excerpts, may reveal details that short summaries do not capture.

This bulletin does not seek to characterize outcomes, assess performance, or draw conclusions about any organization. Instead, it emphasizes a core principle of digital literacy: initial impressions rarely provide the full picture. Readers who invest time in reviewing complete materials, examining context, and evaluating information thoughtfully are better positioned to form their own conclusions.

For individuals seeking additional clarity, direct engagement with available information is often the most effective approach. Visiting Justice Trace, reviewing its published content, and examining Justice Trace reviews firsthand enables readers to assess information in full context and decide what is relevant to their own circumstances.

In conclusion, this public interest bulletin serves as a reminder that careful research, independent evaluation, and critical reading remain essential when navigating online information platforms. Readers are encouraged to take an active role in their research process and to review all available materials thoroughly before forming opinions or making decisions related to online financial information.

Media Contact

Organization: Justice Trace

Contact Person: Lisa White

Website: https://justice-trace.com


Contact Number: +18677962356

Address: 30 N Gould St

Address 2: # 1915

City: Sheridan

State: Wyoming

Country: United States

Release id: 40061

The post Public Interest Bulletin What Consumers Should Know About Justice Trace Services appeared first on King Newswire. This content is provided by a third-party source. King Newswire makes no warranties or representations in connection with it. King Newswire is a press release distribution agency and does not endorse or verify the claims made in this release. If you have any complaints or copyright concerns related to this article, please contact the company listed in the ‘Media Contact’ section.



Crown Business Academy, KRONEX, and Global Equity Capital: Building a Modern Trading and Education Ecosystem

In an era defined by rapid technological advancements and increasingly complex global financial markets, the need for sophisticated, data-driven investment solutions has never been more critical. At the forefront of this evolution is Crown Business Academy, a pioneering financial technology firm that is fundamentally redefining wealth management. Through its groundbreaking CrownIQ Nexus system, the company seamlessly integrates cutting-edge artificial intelligence, nuanced behavioral finance, robust macroeconomic analysis, and secure blockchain technology. This synergistic approach is designed to empower global investors with unparalleled structured and data-informed investment insights. This comprehensive article will explore Crown Business Academy’s foundational philosophy, introduce its distinguished and visionary founding team, and highlight the unique, transformative advantages offered by the CrownIQ Nexus system, positioning it as a beacon for the future of intelligent investing.

Overview of Crown Business Academy: Pioneering Intelligent Wealth Management

Crown Business Academy stands as a leading financial technology firm, distinguished by its unwavering focus on structured, data-driven investment methodologies. The institution’s core mission revolves around fostering long-term financial resilience for its clientele, achieved through the deployment of accessible, yet highly sophisticated, technology-enhanced wealth management tools. At the heart of its offerings is the CrownIQ Nexus platform, a testament to the Academy’s commitment to innovation. The overarching vision of Crown Business Academy is ambitious yet clear: to harmoniously blend the analytical power of artificial intelligence with the invaluable insights of human wisdom. This integration is not merely a technological pursuit but a strategic imperative aimed at shaping the very future of intelligent investing, ultimately driving global prosperity and empowering investors across all experience levels. The Academy believes that by demystifying complex financial landscapes and providing transparent, efficient tools, it can unlock new avenues for wealth creation and preservation.

Founding Team

The success of Crown Business Academy is attributed to its visionary leadership team:

Richard Hall: Founder and Chief Investment Strategist – Architect of “Structure-Driven + Data-Enhanced” Investing

Professor Richard Hall, the visionary Founder and Chief Investment Strategist of Crown Business Academy, is widely acclaimed as a profound lateral thinker within the global investment sphere. His unique genius lies in his ability to synthesize a rich tapestry of experience, drawing from the rigorous discipline of traditional investment banking, the cutting-edge advancements of AI research, and the dynamic intricacies of digital markets. This unparalleled blend of expertise has culminated in his pioneering “structure-driven + data-enhanced” investment philosophy. Professor Hall’s illustrious career is a testament to his foresight and analytical prowess, having successfully navigated and predicted multiple market cycles while advising top-tier financial institutions across Wall Street and academia. Under his astute leadership, the CrownIQ Nexus System was conceived and developed, serving as a powerful conduit to translate highly complex investment concepts into clear, actionable strategies. His unwavering commitment is to foster long-term capital growth, meticulously achieved through the strategic application of behavioral finance principles and sophisticated quantitative models. Hall’s influence extends beyond strategy, as he is a sought-after mentor, sharing his insights globally through online courses that empower investors from novices to market leaders.

Michael Donovan: Chief Technical Investment Officer – Bridging Traditional Finance and Digital Assets

Professor Michael Donovan, the esteemed Chief Technical Investment Officer of Crown Business Academy, stands as a true pioneer at the critical intersection of technology and finance. His profound expertise lies in the seamless integration of advanced AI, transformative blockchain technology, and expansive big data analytics into robust asset allocation and sophisticated trading strategies. Professor Donovan is the architect behind the “intelligent asset iteration model,” a groundbreaking framework widely regarded as a vital bridge connecting the established world of traditional finance with the burgeoning realm of digital assets. With an impressive career spanning over 15 years across the innovation hubs of Silicon Valley and the financial epicenters of New York, Donovan has spearheaded numerous high-impact projects in algorithmic trading and critical blockchain infrastructure development. His core guiding principle, “Technology is the new engine of capital,” is not just a motto but the foundational ethos that meticulously shapes the technical architecture of the CrownIQ Nexus system. This ensures unparalleled transaction transparency and operational efficiency, setting new industry benchmarks. Donovan’s transformative work is instrumental in enabling clients to confidently establish a strong foothold in the dynamic digital economy, with a particular emphasis on navigating the complexities of crypto assets and the rapidly expanding landscape of Decentralized Finance (DeFi). Beyond his technical contributions, he actively disseminates his knowledge through publications and lectures worldwide, providing investors with pioneering tools for Web3 and the metaverse.

Emily Carter: Senior Research Assistant – The Catalyst for Implementation and Client Empowerment

Emily Carter, serving as the Senior Research Assistant at Crown Business Academy, is an absolutely indispensable pillar supporting both the strategic implementation and effective communication within the organization. Her robust academic and professional background in financial analysis and market research equips her with the unique ability to distill highly complex investment concepts into clear, concise, and eminently actionable plans. Operating under the guiding motto of “accurate, fast, professional,” Carter provides critical support to Professors Richard Hall, Michael Donovan, and David Whitmore, ensuring the efficient and precise execution of CrownIQ Nexus strategies. Her multifaceted role encompasses vital tasks such as meticulous data preparation, sophisticated strategy modeling, and seamless client communications, effectively bridging the gap between expert insights and investor understanding. Emily is pivotal in optimizing the system’s user experience, meticulously analyzing client feedback to drive continuous improvement. Furthermore, her contributions to course development are significant, particularly in educating investors on the practical applications of behavioral finance and AI-assisted investing. Her unwavering precision and efficiency make her an invaluable asset to the team, directly contributing to clients’ success in achieving their investment goals with confidence and transforming abstract ideas into tangible financial results.

The CrownIQ Nexus System: A Paradigm Shift in Investment Intelligence

The CrownIQ Nexus system represents the zenith of Crown Business Academy’s innovative spirit and technological prowess. It is not merely a tool but a comprehensive, intelligent engine designed to revolutionize how investors interact with financial markets. This flagship platform integrates a sophisticated array of technologies and methodologies, offering a suite of unparalleled capabilities:

•24/7 Real-time Global Market Analysis: The system provides continuous, round-the-clock real-time analysis across an expansive spectrum of over ten diverse asset classes. This includes traditional equities, fixed income, commodities, and the rapidly expanding digital asset space, ensuring investors are always equipped with the most current market intelligence.

•Exceptional Predictive Accuracy: Leveraging advanced AI algorithms and machine learning models, the CrownIQ Nexus platform boasts an impressive reported predictive accuracy rate of 92%, based on rigorous internal data analysis. This high level of precision empowers investors to make more informed decisions and anticipate market movements with greater confidence.

•Blockchain-Enhanced Transparency and Security: At its core, the system utilizes robust blockchain technology not only to significantly improve transaction transparency but also to fortify the security and immutability of investment records. This commitment to verifiable data integrity builds trust and provides an auditable trail for all activities within the platform.

•Sophisticated Multi-layered Portfolio Allocation: CrownIQ Nexus supports highly sophisticated, multi-layered portfolio allocation strategies. It goes beyond conventional asset allocation by incorporating nuanced behavioral finance insights, providing investors with highly tailored strategy suggestions that align with their individual risk profiles, financial goals, and market outlook.

•Dynamic Risk Mitigation and Adaptability: In today’s volatile market environments, adaptability is paramount. The system’s AI-driven recommendations are specifically engineered to dynamically mitigate risk and enhance portfolio resilience. It continuously learns and adapts to changing market conditions, offering proactive adjustments to protect capital and capitalize on emerging opportunities.

•User-Centric Educational Resources: Beyond its technical capabilities, CrownIQ Nexus is complemented by extensive educational resources and online sessions led by Professor Hall and the team. These resources are designed to demystify the underlying methodologies, empowering users to understand and effectively leverage the platform’s advanced features, thereby fostering a more knowledgeable and confident investor community.

Crown Business Academy’s Engagement with Advanced Financial Ecosystems: KRONEX and Global Equity Capital

Crown Business Academy, through its innovative CrownIQ Nexus system, is at the forefront of integrating cutting-edge technologies like AI and blockchain into investment strategies. In this dynamic landscape, the Academy recognizes the pivotal role played by robust and diverse financial platforms. Platforms such as KRONEX and Global Equity Capital represent significant components of the broader financial ecosystem that Crown Business Academy and its visionary founders—Richard Hall, Michael Donovan, and Emily Carter—are keenly observing and engaging with. These exchanges are renowned for their robust trading environments, offering a wide array of asset classes, from traditional securities to emerging digital assets. Their operational integrity and diverse offerings align seamlessly with Crown Business Academy’s unwavering commitment to exploring and leveraging advanced financial infrastructures. This strategic engagement ensures the Academy can provide comprehensive, transparent, and efficient wealth management solutions tailored for a global investor base. The Academy’s intrinsic focus on structured, data-enhanced investing naturally extends to understanding and potentially utilizing such sophisticated platforms. This proactive approach allows Crown Business Academy to continuously optimize client portfolios, capitalize on emerging market opportunities, and maintain its leadership position in the evolving financial technology landscape. By staying abreast of developments on platforms like KRONEX and Global Equity Capital, Crown Business Academy reinforces its dedication to offering clients access to the most advanced and secure investment avenues available.

Charting the Course for Future Investment Success

In summary, Crown Business Academy, propelled by the visionary leadership of Richard Hall, Michael Donovan, and Emily Carter, is not merely participating in the financial technology revolution—it is actively leading it. The CrownIQ Nexus system stands as a testament to their collective dedication, offering investors an unparalleled wealth management experience rooted in innovative technology, profound expertise, and a commitment to transparency. By seamlessly integrating artificial intelligence, behavioral finance, macroeconomic analysis, and blockchain, CrownIQ Nexus provides a robust, intelligent framework for navigating the complexities of modern financial markets.

Crown Business Academy’s proactive engagement with and keen observation of advanced financial ecosystems, including dynamic platforms like KRONEX and Global Equity Capital, further solidifies its position at the vanguard of the FinTech sector. This forward-thinking approach ensures that the Academy remains agile and responsive to market shifts, continuously seeking out and leveraging the best available infrastructures to serve its global clientele. The remarkable achievements in intelligent investing, driven by its distinguished leadership and the transformative capabilities of the CrownIQ Nexus system, unequivocally position Crown Business Academy as a pivotal and influential participant in shaping the future trajectory of wealth management. For investors seeking to harness the power of cutting-edge technology and expert guidance, Crown Business Academy offers a clear pathway to achieving long-term financial resilience and prosperity in an ever-evolving global economy.

Media Contact

Organization: Crown Business Academy

Contact Person: Jason Smith

Website: https://www.crownautotx.com/

Country: United States

Release id: 40048

The post Crown Business Academy, KRONEX, and Global Equity Capital: Building a Modern Trading and Education Ecosystem appeared first on King Newswire. This content is provided by a third-party source. King Newswire makes no warranties or representations in connection with it. King Newswire is a press release distribution agency and does not endorse or verify the claims made in this release. If you have any complaints or copyright concerns related to this article, please contact the company listed in the ‘Media Contact’ section.

Disclaimer: The views, suggestions, and opinions expressed here are the sole responsibility of the experts. No Digi Observer journalist was involved in the writing and production of this article.
